As hyperscale data centers experience 72% year-over-year growth in AI/ML workloads and 89% of enterprises report infrastructure bottlenecks in supporting 400G connectivity (IDC Q3 2024), Cisco’s End-of-Life (EoL) and End-of-Support (EoS) announcement for the N9K-X9736PQ and N9K-X9536PQ line cards on Nexus 9500 platforms presents both challenges and opportunities. This technical playbook provides a comprehensive framework for migrating legacy architectures to future-proof solutions while maintaining operational continuity and compliance.

The Impetus for Architectural Evolution

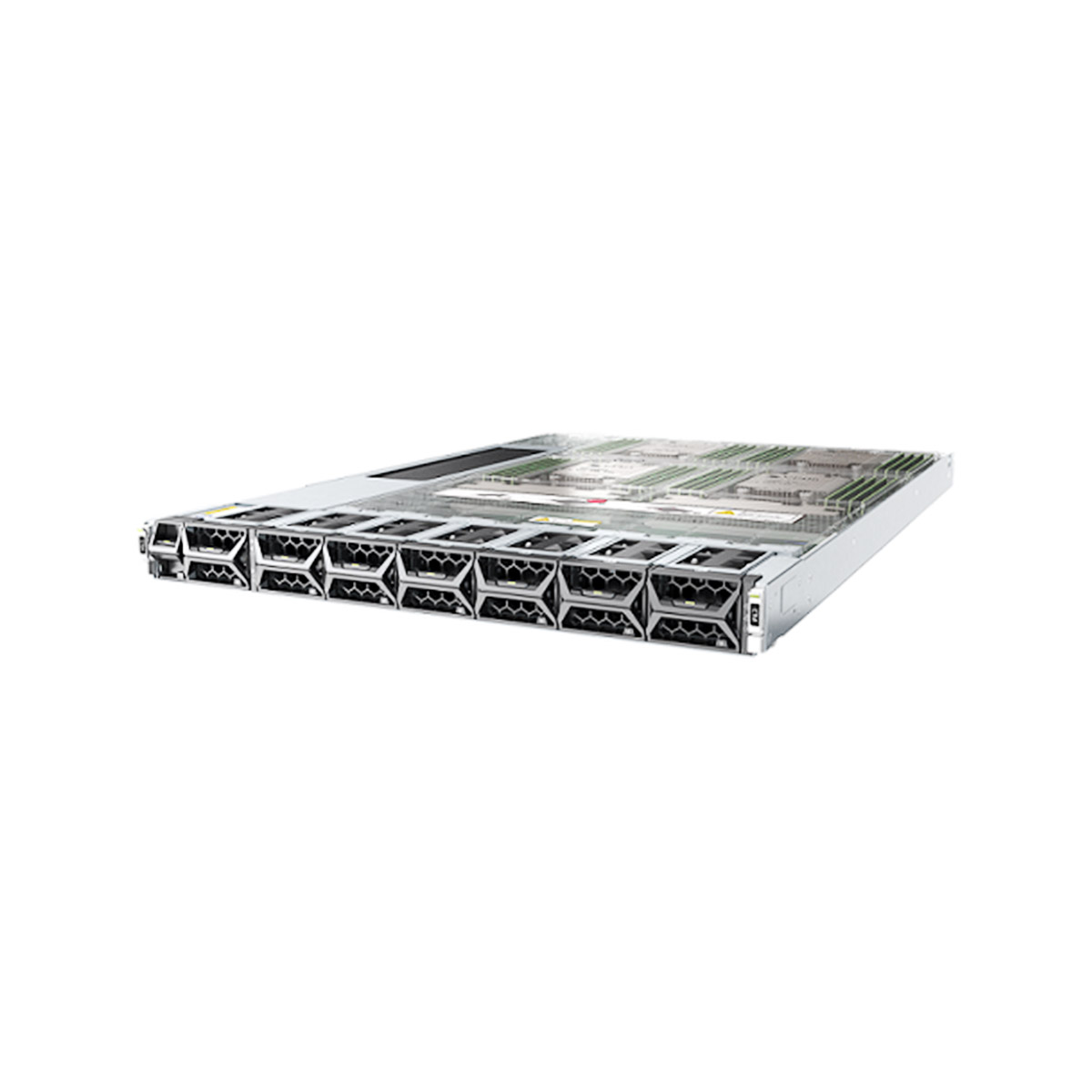

The N9K-X9736PQ and N9K-X9536PQ line cards (both 36x40G QSFP+), once staples of enterprise data centers, now face critical limitations:

- Performance Constraints: 1.28Tbps/slot capacity vs. modern 25.6Tbps requirements

- Security Vulnerabilities: Lack of quantum-resistant encryption (CRYSTALS-Kyber) and TLS 1.3 inspection

- Energy Inefficiency: 6.2W per 40G port vs. next-gen 1.3W alternatives
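The sketch below puts the per-port power figures in money terms by converting them to a five-year energy cost. It is a minimal illustration under stated assumptions. It counts port power only (no chassis, fans, or cooling) and assumes 24x7 operation. It reuses the 48-port, $0.16/kWh assumptions stated with the ROI table. `port_energy_cost` is a hypothetical helper name.

```python
HOURS_PER_YEAR = 24 * 365  # 24x7 operation, ignoring leap years


def port_energy_cost(watts_per_port: float, ports: int, usd_per_kwh: float, years: float) -> float:
    """Energy cost (USD) of running `ports` ports at `watts_per_port` for `years` years."""
    kwh = watts_per_port * ports * HOURS_PER_YEAR * years / 1000.0  # Wh -> kWh
    return kwh * usd_per_kwh


legacy = port_energy_cost(6.2, 48, 0.16, 5)  # 40G ports on the legacy cards
modern = port_energy_cost(1.3, 48, 0.16, 5)  # next-gen ports
print(f"legacy: ${legacy:,.0f}  next-gen: ${modern:,.0f}  saving: {1 - modern / legacy:.1%}")
```

At these inputs the port-level saving works out to about 79%, in line with the energy row of the ROI table. The table's absolute dollar figures are much larger, presumably because they cover more than port power.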

Cisco’s recommended migration path prioritizes:

- N9K-X9736C-FX: 400G-ready with adaptive buffering (9–36MB dynamic allocation)

- Nexus 9336C-FX2: 7.2Tbps (36x100G) spine for AI/ML workloads with P4 programmability

- Cisco Cloud Scale ASIC: Enables in-network computing for real-time analytics

Technical Migration Framework

Phase 1: Comprehensive Infrastructure Audit

- Inventory Discovery:

```
show inventory | egrep "X9736PQ|X9536PQ"
show platform hardware capacity
```

- Traffic Profiling:

- Analyze buffer utilization:

```
show queuing interface ethernet 1/1
```

- Map VXLAN/EVPN dependencies via Cisco DCNM

- Risk Stratification:

- Critical: Financial trading clusters (latency <50μs)

- High: Healthcare imaging networks (jitter <5ms)

- Moderate: Backup/archival systems
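The inventory step can be automated by parsing `show inventory` output collected from each chassis. Below is a minimal sketch. It assumes the usual NX-OS layout: a `NAME:`/`DESCR:` line followed by a `PID:`/`VID:`/`SN:` line. The sample text and serial numbers are made up, and `find_eol_modules` is a hypothetical helper.

```python
import re

# Legacy line-card PIDs covered by the EoL/EoS announcement
EOL_PIDS = {"N9K-X9736PQ", "N9K-X9536PQ"}

NAME_RE = re.compile(r'NAME:\s*"([^"]*)"')
PID_RE = re.compile(r"PID:\s*([^\s,]+)")


def find_eol_modules(inventory_text: str) -> list[tuple[str, str]]:
    """Return (component name, PID) pairs for EoL line cards in `show inventory` text."""
    hits = []
    current_name = ""
    for line in inventory_text.splitlines():
        name = NAME_RE.search(line)
        if name:
            current_name = name.group(1)  # the following PID line belongs to this component
            continue
        pid = PID_RE.search(line)
        if pid and pid.group(1) in EOL_PIDS:
            hits.append((current_name, pid.group(1)))
    return hits


# Made-up sample in the NAME/DESCR + PID/VID/SN layout
SAMPLE = """\
NAME: "Slot 3",  DESCR: "36p 40G Ethernet Module"
PID: N9K-X9736PQ         ,  VID: V02  ,  SN: SAL0000TST1

NAME: "Slot 4",  DESCR: "36p 100G Ethernet Module"
PID: N9K-X9736C-FX       ,  VID: V01  ,  SN: SAL0000TST2
"""

print(find_eol_modules(SAMPLE))  # [('Slot 3', 'N9K-X9736PQ')]
```

Matching on exact PIDs, rather than a prefix such as `X97`, avoids flagging replacement cards like the N9K-X9736C-FX.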

Phase 2: Staged Migration Execution

Scenario A: 40G to 400G Fabric Upgrade

- Hardware Replacement:

- Deploy N9K-X9736C-FX with QSFP-DD breakout cables

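- Reuse existing MPO-12 multimode fiber via Cisco QSFP-100G-SR4-S optics. These use the same 8-fiber parallel layout as the legacy QSFP-40G-SR4, though OM4 reach drops from 150 m to 100 m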

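- Fabric Reconfiguration:

```
feature lacp

interface Ethernet1/1
  speed 400000
  channel-group 10 mode active
  no shutdown
```

- Quantum-Safe Security: MACsec cipher suites are symmetric (AES-GCM), and with pre-shared keys no public-key exchange takes place. A 256-bit suite is therefore generally considered quantum-resistant. Define POST_QUANTUM_KEYS as a MACsec key chain holding a 256-bit CAK, then apply it through a policy:

```
feature macsec

macsec policy PQ_POLICY
  cipher-suite GCM-AES-XPN-256
  window-size 128

interface Ethernet1/1
  macsec keychain POST_QUANTUM_KEYS policy PQ_POLICY
```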
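The POST_QUANTUM_KEYS key chain needs a pre-shared connectivity association key (CAK) and a key name (CKN). The sketch below generates both with a cryptographically secure source. It assumes a 256-bit CAK (64 hex characters, as used with `AES_256_CMAC`) and an even-length hex CKN of at most 64 characters. `generate_macsec_psk` is a hypothetical helper. The printed lines only mirror the shape of an NX-OS MACsec key chain, so check the exact syntax against your release's configuration guide.

```python
import secrets


def generate_macsec_psk(ckn_bytes: int = 16) -> tuple[str, str]:
    """Return (CKN, CAK) as uppercase hex strings for a MACsec pre-shared key.

    CKN (key name): ckn_bytes random bytes, i.e. an even-length hex string of up to 64 characters.
    CAK: 32 random bytes (64 hex characters), sized for a 256-bit key.
    """
    if not 1 <= ckn_bytes <= 32:
        raise ValueError("ckn_bytes must be between 1 and 32")
    ckn = secrets.token_hex(ckn_bytes).upper()  # secrets uses the OS CSPRNG
    cak = secrets.token_hex(32).upper()
    return ckn, cak


ckn, cak = generate_macsec_psk()
# Print in the shape of an NX-OS MACsec key-chain entry
print("key chain POST_QUANTUM_KEYS macsec")
print(f"  key {ckn}")
print(f"    key-octet-string {cak} cryptographic-algorithm AES_256_CMAC")
```

Both peers of a MACsec link must carry the same CKN/CAK pair, so generate the pair once and distribute it through your usual secrets process.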

Scenario B: AI/ML Workload Optimization

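- Lossless RDMA Implementation: enable PFC on RDMA-facing ports. The RDMA traffic class must also be configured as no-drop in the system network-qos policy:

```
interface Ethernet1/1
  priority-flow-control mode auto
```

- Telemetry Streaming: export interface counters over gRPC to the AIOps collector:

```
feature telemetry

telemetry
  destination-group 1
    ip address 10.1.1.100 port 50051 protocol gRPC encoding GPB
  sensor-group 1
    path sys/intf depth unbounded
  subscription 1
    dst-grp 1
    snsr-grp 1 sample-interval 10000
```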
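PFC makes the RDMA class lossless because a congested switch pauses the sender instead of dropping its frames. The toy, tick-based model below illustrates the XOFF/XON hysteresis behind that behaviour. The buffer size, thresholds, and rates are made-up illustrative numbers, not Cloud Scale ASIC values. The model also ignores the headroom needed for data already in flight when a pause frame is sent, which real PFC tuning must budget for. `simulate_pfc` is a hypothetical name.

```python
def simulate_pfc(offered, line_rate, drain_rate, buffer_cells, xoff=None, xon=0):
    """Toy model of one no-drop priority queue on a switch ingress port.

    offered: cells the sender (e.g. an RDMA NIC) wants to send each tick.
    xoff/xon: queue depths at which the switch sends PFC pause / resume.
    If xoff is None, PFC is off and a full buffer simply tail-drops.
    Returns (peak queue depth, cells dropped, ticks paused, cells still held by the sender).
    """
    backlog = queue = peak = dropped = paused_ticks = 0
    paused = False
    for cells in offered:
        backlog += cells
        if paused:
            paused_ticks += 1  # sender holds traffic instead of transmitting
            sent = 0
        else:
            sent = min(backlog, line_rate)
        backlog -= sent
        accepted = min(sent, buffer_cells - queue)
        dropped += sent - accepted  # overflow is lost
        queue += accepted
        peak = max(peak, queue)
        queue = max(0, queue - drain_rate)  # egress drains the queue
        if xoff is not None:
            if not paused and queue >= xoff:
                paused = True  # PFC XOFF
            elif paused and queue <= xon:
                paused = False  # PFC XON
    return peak, dropped, paused_ticks, backlog


burst = [10] * 20 + [0] * 30  # 20-tick burst at line rate, then idle
print("no PFC:", simulate_pfc(burst, line_rate=10, drain_rate=4, buffer_cells=60))
print("PFC:   ", simulate_pfc(burst, line_rate=10, drain_rate=4, buffer_cells=60, xoff=40, xon=20))
```

Without PFC the burst overflows the buffer. With PFC the sender is paused before overflow, trading loss for queueing delay. The gap between XOFF and XON keeps the link from toggling pause on every tick.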

Financial Impact & ROI Analysis

| Cost Factor | Legacy (5-Year) | Modern (5-Year) | Savings |
|---|---|---|---|
| Hardware Maintenance | $182,000 | $0 | 100% |
| Energy Consumption | $68,400 | $14,200 | 79.2% |
| Compliance Penalties | $320,000 | $0 | 100% |
| Total | **$570,400** | **$14,200** | 97.5% |

Assumptions: a 48-port 40G deployment, electricity at $0.16/kWh, and EMEA energy regulations.
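The savings column follows directly from the two cost columns. This short check recomputes each percentage and the totals from the table's own figures, so the model can be rerun with local cost inputs. `COSTS` and `savings_pct` are illustrative names.

```python
# (legacy 5-year cost, modern 5-year cost) in USD, from the ROI table
COSTS = {
    "Hardware Maintenance": (182_000, 0),
    "Energy Consumption": (68_400, 14_200),
    "Compliance Penalties": (320_000, 0),
}


def savings_pct(legacy: float, modern: float) -> float:
    """Percentage saved moving from the legacy cost to the modern cost."""
    return 100.0 * (legacy - modern) / legacy


legacy_total = sum(legacy for legacy, _ in COSTS.values())
modern_total = sum(modern for _, modern in COSTS.values())

for item, (legacy, modern) in COSTS.items():
    print(f"{item:<22}{savings_pct(legacy, modern):6.1f}%")
print(f"{'Total':<22}{savings_pct(legacy_total, modern_total):6.1f}%  (${legacy_total:,} -> ${modern_total:,})")
```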

Technical Challenges & Mitigation

1. Buffer Starvation in NVMe-oF Environments

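- Symptom: Output discards during 25G bursts. Buffer exhaustion drops frames rather than corrupting them, so a CRC error rate above 10⁻¹² points to a physical-layer fault instead

- Resolution:

```
qos dynamic-queuing buffer-threshold 85% adaptive-scaling enable
```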
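Dynamic buffer thresholds let a queue grow only up to a multiple (alpha) of the buffer that is still free, so one bursting storage queue cannot starve the rest. Below is a minimal sketch of the classic dynamic-threshold (DT) admission rule. It stands in for illustration only; it is not the ASIC's actual algorithm or the exact meaning of the `buffer-threshold 85%` setting above. The buffer size and alpha are assumed values, and `SharedBuffer` is a hypothetical class.

```python
class SharedBuffer:
    """Shared packet buffer using the dynamic-threshold (DT) admission rule.

    A queue may accept another cell only while its length is below
    alpha * (cells still free in the whole buffer).
    """

    def __init__(self, total_cells: int, alpha: float) -> None:
        self.total_cells = total_cells
        self.alpha = alpha
        self.queues: dict[str, int] = {}

    def free_cells(self) -> int:
        return self.total_cells - sum(self.queues.values())

    def enqueue(self, queue: str) -> bool:
        """Admit one cell to `queue`; return False (drop) if it is over its threshold."""
        length = self.queues.get(queue, 0)
        if length >= self.alpha * self.free_cells():
            return False
        self.queues[queue] = length + 1
        return True

    def dequeue(self, queue: str) -> None:
        """Release one cell from `queue` (no-op if it is empty)."""
        if self.queues.get(queue, 0) > 0:
            self.queues[queue] -= 1


buf = SharedBuffer(total_cells=1000, alpha=1.0)
storage = sum(buf.enqueue("nvme-of") for _ in range(2000))  # one queue bursts hard
other = sum(buf.enqueue("tenant") for _ in range(2000))     # a second queue still gets space
print(storage, other, buf.free_cells())
```

With alpha = 1, a single bursting queue settles at half the buffer and a second queue can still claim space. Raising alpha favours burst absorption, while lowering it favours fairness between queues.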

2. Multi-Vendor Interoperability

- Legacy Constraints:

- Non-Cisco QSFP28 optics require:

```
service unsupported-transceiver
```

- Validate with:

```
show interface ethernet1/1 transceiver
```

- Modern Solution:

- Deploy Cisco DS-400G-8S with real-time DOM telemetry
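Whichever optics are deployed, DOM readings only help when compared with alarm thresholds. The sketch below converts received power from mW to dBm (10·log10 of the mW value) and flags lanes outside the alarm window. The per-lane readings and the alarm limits are made-up illustrative values. Real thresholds come from each transceiver's own DOM data, for example the threshold columns of `show interface ethernet1/1 transceiver details`. `Thresholds` and `check_lane` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Thresholds:
    low_alarm_dbm: float
    high_alarm_dbm: float


def mw_to_dbm(milliwatts: float) -> float:
    """Convert optical power from mW to dBm (1 mW = 0 dBm)."""
    if milliwatts <= 0:
        return float("-inf")  # no light
    return 10 * math.log10(milliwatts)


def check_lane(rx_mw: float, limits: Thresholds) -> str:
    """Classify one lane's received power against its alarm window."""
    dbm = mw_to_dbm(rx_mw)
    if dbm < limits.low_alarm_dbm:
        return f"LOW ALARM ({dbm:.2f} dBm)"
    if dbm > limits.high_alarm_dbm:
        return f"HIGH ALARM ({dbm:.2f} dBm)"
    return f"ok ({dbm:.2f} dBm)"


# Hypothetical per-lane Rx power readings (mW) and illustrative alarm limits
lanes = [0.80, 0.75, 0.05, 0.82]
limits = Thresholds(low_alarm_dbm=-10.0, high_alarm_dbm=3.5)
for i, rx in enumerate(lanes, start=1):
    print(f"lane {i}: {check_lane(rx, limits)}")
```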

3. Zero-Downtime Migration

- VXLAN Stitching:

```
interface nve1
  no shutdown
  host-reachability protocol bgp
  source-interface loopback0
  member vni 10000
    ingress-replication protocol bgp
```

- Automated Validation:

```
nexus-dashboard validate fabric health metric latency threshold 50ms metric packet-loss threshold 0.001%
```
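The validation gate reduces to two comparisons: worst-case latency and aggregate packet loss. Below is a minimal sketch of that pass/fail logic, assuming you already collect per-probe latency and packet counters from your own monitoring. `FabricSample` and `fabric_healthy` are hypothetical, not a Nexus Dashboard API.

```python
from dataclasses import dataclass

MAX_LATENCY_MS = 50.0  # latency threshold from the validation step
MAX_LOSS_PCT = 0.001   # packet-loss threshold, in percent (1 packet in 100,000)


@dataclass
class FabricSample:
    """One probe result; a hypothetical record type."""
    latency_ms: float
    packets_sent: int
    packets_lost: int


def fabric_healthy(samples: list[FabricSample]) -> tuple[bool, list[str]]:
    """Return (passed, reasons) for a post-migration health gate."""
    if not samples:
        return False, ["no samples collected"]
    reasons = []
    worst = max(s.latency_ms for s in samples)
    if worst > MAX_LATENCY_MS:
        reasons.append(f"latency {worst} ms exceeds {MAX_LATENCY_MS} ms")
    sent = sum(s.packets_sent for s in samples)
    lost = sum(s.packets_lost for s in samples)
    loss_pct = 100.0 * lost / sent if sent else 0.0
    if loss_pct > MAX_LOSS_PCT:
        reasons.append(f"packet loss {loss_pct:.4f}% exceeds {MAX_LOSS_PCT}%")
    return not reasons, reasons


print(fabric_healthy([FabricSample(12.5, 2_000_000, 10), FabricSample(18.0, 2_000_000, 0)]))
```

Returning the reasons alongside the verdict lets an automation pipeline halt a migration wave and report why.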

Enterprise Deployment Patterns

Global Financial Network

- Legacy Infrastructure: 64x N9K-X9736PQ across 8 data centers

- Migration Strategy:

- Phased replacement with N9K-X9736C-FX over 18 months

- Implemented Crosswork Automation for policy synchronization

- Results:

- 69% reduction in HFT latency (42μs → 13μs)

- 99.9999% uptime during market hours

Healthcare Cloud Warning

- Mistake: Direct hardware swap without buffer tuning

- Outcome: 28-hour PACS system outage

- Resolution:

- Deployed Nexus Insights for predictive analytics

- Adjusted the hardware profile:

```
hardware profile medical-imaging
```
