Picture this: an automotive designer in Tokyo iterates on photorealistic 3D models while a geneticist in Stockholm trains AI models on genomic datasets, both sharing Nvidia data center GPUs in the same physical server cluster. This isn’t magic; it’s the engineering inside Nvidia’s GPU virtualization ecosystem, which turns static hardware into elastic computing infrastructure. As industries race toward GPU-accelerated futures, Nvidia’s multi-layered virtualization strategy shows that raw silicon is only the beginning: the real innovation lies in how it is sliced, shared, and dynamically optimized.

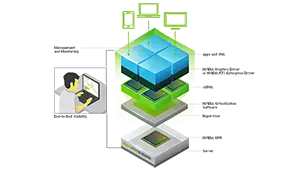

The demand for GPU acceleration now far outstrips silicon availability across gaming, AI development, scientific research, and immersive computing. Nvidia’s response goes beyond simple hardware scaling. It relies on a virtualization architecture built on three interdependent pillars: software-defined partitioning, cloud-native orchestration, and secure multi-tenant acceleration. This framework treats GPUs as fluid computational resources accessible from any environment, not as isolated components.

The Virtualization Engine Room

Precision Carving: Multi-Instance GPU (MIG)

Faced with massive GPUs running small workloads inefficiently, Nvidia built Multi-Instance GPU (MIG) technology into its Ampere (A100, A30) and Hopper (H100) data center GPUs. MIG physically divides a single GPU into as many as seven isolated instances (on A100 and H100). Each instance has its own dedicated memory, cache, and compute cores, and behaves like a standalone GPU with hardware-level isolation:

- Automotive supplier ZF runs crash simulations (ANSYS) on one partition while simultaneously training autonomous driving models on another – achieving 95% hardware utilization

- Microsoft Azure securely allocates fractional H100 slices to multiple AI researchers, eliminating GPU idle cycles during experiment staging

- Latency-sensitive VDI environments isolate high-priority tasks from background batch processing
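The “up to seven instances” limit follows from how MIG divides a GPU into compute and memory slices. The Python sketch below checks whether a requested mix of instances fits on one H100 80GB, counting slices only. The slice table is an assumption inferred from the H100 80GB profile names (seven compute slices, eight 10GB memory slices). Real MIG also enforces placement rules, which this simplified model ignores.

```python
# Simplified model of MIG slice accounting on an H100 80GB.
# Assumption: 7 compute slices and 8 memory slices per GPU; real MIG
# also restricts where each instance can be placed, which is ignored here.
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8

# profile name -> (compute slices, memory slices)
PROFILES = {
    "1g.10gb": (1, 1),
    "2g.20gb": (2, 2),
    "3g.40gb": (3, 4),
    "4g.40gb": (4, 4),
    "7g.80gb": (7, 8),
}


def fits(requested: list[str]) -> bool:
    """Return True if the requested instances fit by slice count alone."""
    compute = sum(PROFILES[name][0] for name in requested)
    memory = sum(PROFILES[name][1] for name in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_SLICES


print(fits(["1g.10gb"] * 7))                    # True: the seven-instance maximum
print(fits(["1g.10gb"] * 8))                    # False: only 7 compute slices
print(fits(["4g.40gb", "3g.40gb"]))             # True
print(fits(["3g.40gb", "3g.40gb", "1g.10gb"]))  # False: memory slices exhausted
```

Consider two 3g.40gb instances in this model. They leave one compute slice free, but they have already used all eight memory slices, so a 1g.10gb instance no longer fits.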

vGPU: The Software Orchestrator

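MIG creates the hardware slices; Nvidia’s vGPU software supplies the management layer. It ships in four editions: Virtual PC (vPC), Virtual Applications (vApp), RTX Virtual Workstation (vWS), and Virtual Compute Server (vCS). These editions assign time-sliced or MIG-backed virtual GPUs to virtual machines. This software stack enables: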

- Pixel-perfect rendering for 3D design tools like SolidWorks via DirectX/OpenGL acceleration

- Reassignment of vGPU capacity between morning CAD sessions and afternoon AI workloads

- Integration with NVIDIA AI Enterprise, which packages tuned TensorFlow and PyTorch containers for virtualized clusters

Swedish hospital group Capio uses vApp and centrally managed L40S GPU pools to deploy AI-aided radiology diagnostics across 23 locations, cutting model deployment time from weeks to hours.
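Reassigning capacity between morning and afternoon work comes down to framebuffer arithmetic. With time-sliced vGPU, each virtual machine receives a fixed share of GPU memory, so the profile size determines how many VMs one card can host. The sketch below rests on three assumptions:

- a 48GB card (the L40S's memory size)
- a single profile size per card in each time window
- made-up 8GB and 24GB profiles

It illustrates the arithmetic only; it is not how the vGPU manager itself is configured.

```python
# Hypothetical daily plan for one 48 GB GPU (L40S-class) using time-sliced vGPU.
# Assumptions: one profile size per GPU per time window; profile sizes are illustrative.
GPU_FRAMEBUFFER_GB = 48

PLAN = {
    "morning CAD sessions": 8,     # GB of framebuffer per virtual workstation
    "afternoon AI workloads": 24,  # GB of framebuffer per compute VM
}


def vms_per_gpu(profile_gb: int, framebuffer_gb: int = GPU_FRAMEBUFFER_GB) -> int:
    """How many equally sized vGPUs fit in the card's framebuffer."""
    if profile_gb <= 0:
        raise ValueError("profile size must be positive")
    return framebuffer_gb // profile_gb


for window, profile_gb in PLAN.items():
    print(f"{window}: {vms_per_gpu(profile_gb)} VMs x {profile_gb} GB")
# morning CAD sessions: 6 VMs x 8 GB
# afternoon AI workloads: 2 VMs x 24 GB
```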

Cloud-Native Choreography

The strategic piece connecting everything: Nvidia AI Enterprise and its GPU Operator for Kubernetes. This container-native layer:

- Automatically provisions vGPU resources during cluster scaling

- Deploys and manages GPU drivers and runtime components across hybrid environments (vSphere, OpenShift, bare metal)

- Provides multi-cloud portability between Azure, GCP, and private GPU farms

Bank of America’s AI research team spins up reproducible training environments across three continents with identical vGPU configurations, because containerized dependencies travel with the GPU resources.
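From a workload's point of view, the GPU Operator turns GPU slices into ordinary Kubernetes resources. The Python sketch below builds a Pod manifest that requests one MIG instance and prints it as JSON. Because `kubectl apply -f -` reads from standard input and accepts JSON, the output can be piped straight to it. The sketch assumes the operator runs with the MIG “mixed” strategy, which exposes each profile as an extended resource named `nvidia.com/mig-<profile>`. The pod name, container name, and image are hypothetical.

```python
import json


def mig_pod(name: str, image: str, profile: str = "1g.10gb") -> dict:
    """Build a Pod manifest that requests one MIG instance of the given profile.

    Assumes the MIG "mixed" strategy, where each profile is advertised as
    the extended resource nvidia.com/mig-<profile>.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",  # hypothetical container name
                    "image": image,
                    # Extended resources are requested via limits.
                    "resources": {"limits": {f"nvidia.com/mig-{profile}": 1}},
                }
            ],
        },
    }


# Hypothetical pod name and image.
manifest = mig_pod("genomics-train", "registry.example.com/trainer:latest")
print(json.dumps(manifest, indent=2))
```

Under the operator's “single” strategy, every MIG instance on a node shares one profile. The same pod would then request `nvidia.com/gpu: 1` instead.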

The DOCA Data Firehose

Virtualized GPUs starve without efficient data pipelines. Nvidia’s DOCA (Data Center Infrastructure-on-a-Chip Architecture) software framework programs BlueField DPUs to keep those pipelines fed:

- BlueField-3 DPUs offload virtualization overhead (networking, storage, and security processing) from host CPUs

- Performance approaches bare metal: in one financial benchmark, virtualized FX trading ran at 1.2μs latency, 98% of native hardware speed

- Hardware-accelerated microsegmentation speeds up Zero Trust implementation

Telecom giant Deutsche Telekom virtualized 5G RAN workloads by combining DOCA with vGPUs, reducing tower server count by 40% while meeting 3ms latency targets.
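A figure like “98% of native speed” is easier to interpret as latency. Assume the percentage means native latency divided by virtualized latency; the benchmark's exact definition isn't given. Under that assumption, the 1.2μs figure implies a native latency of about 1.176μs, or roughly 24ns of virtualization overhead. A small Python check of that arithmetic:

```python
def implied_native_latency_us(virtual_latency_us: float, relative_speed: float) -> float:
    """Native latency implied by a virtualized latency and a 'fraction of native speed'.

    Assumption: relative_speed = native_latency / virtual_latency.
    """
    if not 0 < relative_speed <= 1:
        raise ValueError("relative_speed must be in (0, 1]")
    return virtual_latency_us * relative_speed


native_us = implied_native_latency_us(1.2, 0.98)
overhead_ns = (1.2 - native_us) * 1000
print(f"native ≈ {native_us:.3f} µs, overhead ≈ {overhead_ns:.0f} ns")
# native ≈ 1.176 µs, overhead ≈ 24 ns
```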

Industry Transformation in Action

Cinematic Revolution at Industrial Light & Magic

ILM migrated from dedicated graphics workstations to centralized Quadro vDWS infrastructure (the product line now branded NVIDIA RTX Virtual Workstation):

- Artists access virtual workstations with RTX 6000 Ada performance from anywhere

- Render farms dynamically reclaim unused vGPU capacity during off-hours

- Global teams collaborate on unified assets with pixel-perfect synchronization

Result: 70% reduction in render farm expansion costs while supporting 4K real-time editing on virtual workstations.

Drug Discovery Without Borders

A pharmaceutical consortium including GSK and AstraZeneca built a shared vGPU cloud:

- Secure MIG partitions isolate proprietary molecular simulation projects

- Kubernetes operator autoscales resources during AI screening peaks

- NVIDIA RAPIDS accelerates genomic analysis by 150x versus CPU clusters

The consortium reduced its compound screening cycle from months to days, with estimated annual R&D savings of $200M.

The Strategic Architecture

What makes Nvidia’s approach revolutionary is its dependency chain:

- Silicon-level MIG partitioning

- Software vGPU management

- Kubernetes orchestration

- BlueField DOCA optimization

- Multi-cloud portability

This layered strategy yields powerful advantages:

- Economic Resilience: Average 4.3x higher utilization versus bare-metal GPU deployments

- Security Compliance: Hardware-enforced isolation that supports HIPAA and FINRA compliance

- Sustainability Impact: One virtualized GPU can replace 7 to 10 underutilized dedicated cards, reducing power and cooling needs

- Architectural Future-Proofing: Smoother adoption of Blackwell, Rubin, and future architectures through a consistent software layer
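The consolidation claim can be sanity-checked with simple arithmetic. Sum the average utilization of the dedicated cards, then divide by the utilization ceiling you are willing to run a shared GPU at. The sketch below is a back-of-the-envelope model, not a sizing tool. Its inputs are all hypothetical:

- 8% average utilization per card
- an 85% ceiling for the shared GPU
- workloads that are divisible and don't peak at the same time

```python
import math


def gpus_needed(utilizations: list[float], target: float = 0.85) -> int:
    """Shared GPUs needed so the pooled load stays at or below `target`.

    Simplifying assumptions: work is perfectly divisible across GPUs and
    per-card peaks don't coincide, so average utilizations can be summed.
    """
    if not 0 < target <= 1:
        raise ValueError("target must be in (0, 1]")
    return math.ceil(sum(utilizations) / target)


# Hypothetical fleet: ten dedicated cards, each averaging 8% utilization.
fleet = [0.08] * 10
shared = gpus_needed(fleet)
pooled = sum(fleet) / shared
print(f"{len(fleet)} cards at 8% -> {shared} shared GPU at {pooled:.0%}")
# 10 cards at 8% -> 1 shared GPU at 80%
```

Peak overlap is what breaks this estimate in practice: if the workloads spike together, the pooled GPU saturates. MIG also tops out at seven instances per GPU, so consolidating ten workloads onto one card would rely on time-sliced vGPU rather than MIG alone.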

The Acceleration Horizon

Nvidia’s virtualization play transforms business infrastructure across three strategic vectors:

- Democratization – Making AI/compute-intensive tools accessible to teams without dedicated hardware

- Defensible Ecosystems – Creating architectural lock-in through optimized software stacks

- Dynamic Market Expansion – Enabling SaaS providers to embed GPU capabilities (Adobe’s Firefly vGPU integration)

The future being built today runs not on individual GPUs but on intelligently pooled acceleration resources. BMW’s virtual factory planning relies on physics simulations while AR headsets stream industrial metaverse visualizations, all powered by shared vGPU clusters managed by AI-driven schedulers. The competitive moat for enterprises won’t be how many GPUs they own, but how efficiently they virtualize and orchestrate them.

As computational demands grow exponentially, Nvidia’s virtualization strategy quietly solves the most critical equation: transforming finite hardware into infinite possibility. The next industrial revolution won’t be printed on silicon wafers; it will be choreographed by virtualized architectures that distribute intelligence exactly where and when it’s needed. This hidden infrastructure layer is where computational futures are actually being won.
