When you’re evaluating network switches for your business infrastructure, it’s easy to get lost in technical jargon. Terms like line rate, wire speed, and non-blocking are tossed around in product specs, but they describe different aspects of performance that can make or break your network’s reliability. For IT managers, network engineers, and procurement specialists, understanding these distinctions isn’t just academic. It’s essential for avoiding costly bottlenecks, ensuring smooth operations, and future-proofing your investment. A switch with fast ports that cannot forward at wire speed under load could cripple your data center during peak traffic. Similarly, a non-blocking architecture, which lets all ports carry traffic at full speed at the same time, is one of the clearest markers of equipment built for demanding enterprise and data center workloads. This article breaks down these concepts in practical terms. It will help you cut through the marketing hype and make informed decisions that match your actual network demands, whether you’re deploying a small office setup or a sprawling data center.

Breaking Down Line Rate: The Theoretical Maximum

Understanding the Basics of Line Rate

Line rate is the raw bit rate at which a physical port transmits and receives, measured in gigabits per second (Gbps). It’s the baseline number you see in product descriptions, like 10G, 25G, or 100G, and it reflects the port’s inherent capacity independent of anything else happening in the switch. However, this figure alone doesn’t tell the whole story. Think of it as the speed limit on a highway: it sets the upper bound, but actual travel speed depends on traffic conditions, road design, and vehicle performance. In switch terms, line rate is fixed by the physical hardware, such as the port’s circuitry and the Ethernet standard it supports, but real-world throughput can be lower. Part of that gap is unavoidable framing overhead, because every Ethernet frame travels with a preamble and an inter-frame gap on the wire. The rest comes from limitations inside the switch.
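
The following minimal Python sketch makes that framing overhead concrete. It assumes standard Ethernet, where each frame travels with an 8-byte preamble and start-of-frame delimiter plus a minimum 12-byte inter-frame gap. It ignores VLAN tags and other options. The function names are illustrative and do not come from any vendor tool.

```python
# Per-frame overhead on the wire for standard Ethernet:
# 7-byte preamble + 1-byte start frame delimiter + 12-byte minimum inter-frame gap.
PREAMBLE_SFD_BYTES = 8
INTER_FRAME_GAP_BYTES = 12
WIRE_OVERHEAD_BYTES = PREAMBLE_SFD_BYTES + INTER_FRAME_GAP_BYTES


def max_frame_rate(line_rate_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second a port can carry at a given frame size.

    frame_bytes counts the Ethernet frame from destination MAC through FCS
    (64 to 1518 bytes for untagged frames).
    """
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return line_rate_bps / bits_on_wire


def frame_throughput_bps(line_rate_bps: float, frame_bytes: int) -> float:
    """Bits per second of frame data (excluding preamble and gap) at full line rate."""
    return max_frame_rate(line_rate_bps, frame_bytes) * frame_bytes * 8


if __name__ == "__main__":
    ten_gig = 10e9
    for size in (64, 512, 1518):
        fps = max_frame_rate(ten_gig, size)
        gbps = frame_throughput_bps(ten_gig, size) / 1e9
        print(f"{size:>5} B frames: {fps:>13,.0f} frames/s, {gbps:.2f} Gbps of frame data")
```

The output shows why 64-byte frames on a 10G port top out at roughly 14.88 million frames per second. At that size, only about 76% of the line rate carries frame data. With 1518-byte frames, about 98.7% of the line rate carries frame data.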

Why Line Rate Doesn’t Always Translate to Real Performance

Several factors can prevent a switch from consistently forwarding at line rate. The switch’s internal architecture plays a huge role. If the backplane or switching fabric (the internal pathway connecting ports) lacks sufficient bandwidth, packets get congested, much like cars bottlenecked at a narrow tunnel. Buffer size is another critical element. Switches use buffers to temporarily store packets during traffic bursts. If buffers are too small or poorly managed, packets are dropped under pressure, leading to retransmissions and added latency. Packet processing capacity matters too. Features like deep packet inspection, access control lists, and QoS classification add work to every forwarding decision. In most switches, dedicated ASICs handle forwarding. If a feature exceeds what the ASIC can do in hardware, or forces traffic onto the switch’s general-purpose CPU, forwarding rates fall well below line rate. Packet size matters as well. A 10G port might forward large frames at full speed yet struggle with small ones. Small frames mean many more packets per second, and therefore many more lookups per second. This is why datasheets often qualify throughput figures with specific test conditions: line rate is an upper bound, not a guaranteed constant.
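
A back-of-the-envelope model can estimate how quickly a buffer overflows. The sketch below assumes a single congested output port with a dedicated, initially empty buffer and constant arrival and drain rates. Real switches usually share buffer memory dynamically across ports, so treat the result as an order-of-magnitude guide only. The 1 MB buffer in the example is a hypothetical figure.

```python
import math


def time_to_overflow_s(buffer_bytes: float, ingress_bps: float, egress_bps: float) -> float:
    """Seconds until a buffer overflows when traffic arrives faster than it drains.

    Simplified fluid model: the buffer starts empty, all of it serves this one
    congested output port, and arrival/drain rates are constant.
    Returns infinity if the port drains at least as fast as traffic arrives.
    """
    excess_bps = ingress_bps - egress_bps
    if excess_bps <= 0:
        return math.inf
    return buffer_bytes * 8 / excess_bps


if __name__ == "__main__":
    # Hypothetical burst: two 10G senders converge on one 10G receiver,
    # with 1 MB of buffer available to that output port.
    t = time_to_overflow_s(buffer_bytes=1_000_000, ingress_bps=20e9, egress_bps=10e9)
    print(f"buffer full after {t * 1e3:.2f} ms")
```

In this scenario, 10Gbps of excess traffic fills 1 MB of buffer in under a millisecond. After that point, every additional packet in the burst is dropped.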

Practical Implications for Network Design

When selecting switches, don’t take line rate at face value. Consider your traffic patterns. If your network handles mostly large, sequential data flows (like video streaming or backups), line rate might be more achievable. But for environments with small, random packets (such as VoIP or transactional databases), processing overhead can significantly reduce effective speeds. Always review independent benchmarks or vendor-provided testing results that simulate real-world scenarios. This helps avoid surprises after deployment, ensuring the switch meets your actual bandwidth needs rather than just theoretical specs.
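
One way to turn a traffic profile into a hardware requirement is to compute the packets per second a port must forward to run that mix at full line rate. This sketch uses two illustrative mixes, both assumptions:

- a backup-style stream of full-size frames
- a small-packet-heavy blend loosely modeled on the IMIX profiles commonly used in testing

```python
from typing import Sequence, Tuple

# Preamble + start-of-frame delimiter (8 bytes) plus minimum inter-frame gap (12 bytes).
WIRE_OVERHEAD_BYTES = 20


def required_pps(line_rate_bps: float, mix: Sequence[Tuple[int, float]]) -> float:
    """Packets per second needed to carry a traffic mix at full line rate.

    mix is a sequence of (frame_size_bytes, relative_weight) pairs; weights
    describe how often each frame size occurs, not how many bytes it carries.
    """
    total_weight = sum(weight for _, weight in mix)
    avg_bits_on_wire = sum(
        (size + WIRE_OVERHEAD_BYTES) * 8 * weight for size, weight in mix
    ) / total_weight
    return line_rate_bps / avg_bits_on_wire


if __name__ == "__main__":
    # Illustrative mixes only; substitute frame-size distributions from your own captures.
    mixes = {
        "backup-style (all 1518 B)": [(1518, 1)],
        "small-packet-heavy (64/576/1518 B at 7:4:1)": [(64, 7), (576, 4), (1518, 1)],
    }
    for name, mix in mixes.items():
        print(f"{name}: {required_pps(10e9, mix) / 1e6:.2f} Mpps per 10G port")
```

The small-packet blend needs roughly four times the packet rate of the backup stream to fill the same 10G link. For such traffic, forwarding capacity rather than line rate is often the real limit.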

Wire Speed: The Benchmark for Real-World Forwarding

Defining Wire Speed and Its Importance

Wire speed takes the concept of line rate a step further. It describes the switch’s ability to actually forward packets at the full line rate of its ports, without drops, at every packet size down to the minimum. In other words, it is a measure of forwarding performance: a wire-speed switch keeps up with the maximum packet rate the port can physically deliver. This matters for reliability, especially in time-sensitive applications, because a switch that cannot keep up must queue or drop the excess. At Layer 2, wire speed means MAC address lookups and forwarding keep pace with incoming frames. That is vital for environments like financial trading floors or real-time collaboration tools that cannot tolerate loss or queuing delay. Wire speed is a throughput guarantee, not a latency figure; per-packet latency is a separate specification worth checking when microseconds matter. At Layer 3, wire speed means IP routing between subnets and VLANs runs at the same rate as Layer 2 switching, even under heavy load. A switch that supports wire speed on all ports ensures each connection can operate at its advertised capacity, so no individual link becomes a weak point in your network.
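
Wire-speed claims are usually checked with throughput tests in the style of RFC 2544. These tests measure the highest rate a device forwards without loss at a set of standard frame sizes (64 to 1518 bytes for Ethernet). The sketch below compares such measurements with the theoretical maximum for each size. The function names, the input format, and the 0.1% tolerance are assumptions for illustration. The sample numbers are synthetic, not results from any real switch.

```python
from typing import Dict, List

# Frame sizes RFC 2544 recommends for Ethernet throughput testing.
RFC2544_FRAME_SIZES = (64, 128, 256, 512, 1024, 1280, 1518)
# Preamble + start-of-frame delimiter (8 bytes) plus minimum inter-frame gap (12 bytes).
WIRE_OVERHEAD_BYTES = 20


def theoretical_fps(line_rate_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second at this frame size on a fully loaded link."""
    return line_rate_bps / ((frame_bytes + WIRE_OVERHEAD_BYTES) * 8)


def below_wire_speed(
    line_rate_bps: float,
    zero_loss_fps: Dict[int, float],
    tolerance: float = 0.001,
) -> List[int]:
    """Frame sizes whose measured zero-loss rate falls short of wire speed.

    zero_loss_fps maps frame size in bytes to the highest rate (frames/s)
    forwarded without loss. tolerance is the shortfall fraction treated as
    measurement noise (0.1% by default, an arbitrary assumption).
    """
    return [
        size
        for size, fps in sorted(zero_loss_fps.items())
        if fps < theoretical_fps(line_rate_bps, size) * (1 - tolerance)
    ]


if __name__ == "__main__":
    # Synthetic illustration, not real test data: a 10G port that reaches wire
    # speed at every size except 64 bytes, where it forwards only 80% of the maximum.
    rate = 10e9
    results = {size: theoretical_fps(rate, size) for size in RFC2544_FRAME_SIZES}
    results[64] *= 0.80
    print(below_wire_speed(rate, results))  # [64]
```

In practice, you would replace the synthetic dictionary with zero-loss rates reported by your own test equipment.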

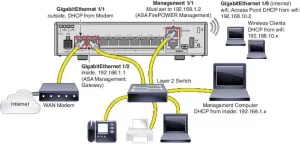

How Wire Speed Works in Multi-Port Scenarios

Wire speed becomes particularly important when evaluating switches with many ports. For instance, a switch with 48×10G ports might claim wire-speed capability. That claim may only mean each port can forward at 10Gbps on its own, not that all ports can do so simultaneously. To achieve wire speed across all ports at once, the switch’s internal switching fabric must support the aggregate bandwidth. In this case, that is 480Gbps in each direction (48 ports × 10Gbps). Vendors usually count both directions of a full-duplex link, so the same switch is typically advertised with 960Gbps of switching capacity. If the internal capacity is lower, congestion occurs when many ports are active, leading to packet loss or increased latency. This is why high-end switches advertise aggregate switching capacity alongside port speeds. When reviewing wire-speed claims, look at the forwarding rate in packets per second (PPS) for different packet sizes. Small packets produce the highest packet rates, so they are the most likely to expose weaknesses in the switch’s architecture.
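
The whole-switch arithmetic can be sketched in a few steps. The code adds up port capacity per direction and doubles it to match the full-duplex way vendors usually quote switching capacity. It then computes the aggregate packet rate needed at the 64-byte minimum frame size. `PortGroup` is a hypothetical helper type. The 48×10G + 4×40G configuration is only an example.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

# Minimum Ethernet frame (64 B) plus preamble/SFD (8 B) and inter-frame gap (12 B), in bits.
MIN_FRAME_BITS_ON_WIRE = (64 + 20) * 8


@dataclass
class PortGroup:
    """A set of identical ports, e.g. 48 ports at 10 Gbps (hypothetical helper type)."""
    count: int
    speed_gbps: float


def wire_speed_requirements(groups: Iterable[PortGroup]) -> Tuple[float, float, float]:
    """Return (Gbps per direction, full-duplex Gbps, Mpps at 64-byte frames)."""
    per_direction_gbps = sum(g.count * g.speed_gbps for g in groups)
    full_duplex_gbps = 2 * per_direction_gbps
    min_frame_mpps = per_direction_gbps * 1e9 / MIN_FRAME_BITS_ON_WIRE / 1e6
    return per_direction_gbps, full_duplex_gbps, min_frame_mpps


if __name__ == "__main__":
    configs = {
        "48x10G": [PortGroup(48, 10)],
        "48x10G + 4x40G": [PortGroup(48, 10), PortGroup(4, 40)],
    }
    for name, groups in configs.items():
        per_dir, duplex, mpps = wire_speed_requirements(groups)
        print(f"{name}: {per_dir:g} Gbps/direction, {duplex:g} Gbps full duplex, {mpps:.1f} Mpps")
```

For the 48×10G switch, this works out to 480Gbps per direction, 960Gbps full duplex, and about 714 million packets per second. A datasheet figure materially below those numbers suggests the switch cannot run every port at wire speed simultaneously.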

Applications Where Wire Speed is Non-Negotiable

In critical infrastructure, wire speed isn’t a luxury; it’s a requirement. Data centers running virtualized environments need it so that VM migrations and storage traffic don’t hit bottlenecks. Enterprises with VoIP or unified communications systems rely on it to avoid the packet loss and queuing that degrade call quality and cause jitter. Even in industrial IoT settings, where sensors generate constant data streams, wire speed prevents backlogs and supports real-time monitoring. When evaluating switches, prioritize models that explicitly guarantee wire-speed performance at both Layer 2 and Layer 3 (if you route on the switch). Verify this with independent testing or case studies from similar deployments.

Non-Blocking Architecture: Ensuring Uninterrupted Performance

What Makes a Switch Non-Blocking?

A non-blocking switch architecture lets every port send and receive at full line rate at the same time, without congestion inside the switch. The one condition is that no single output port is asked to carry more than its own line rate. This requires the internal backplane or switching fabric to have at least as much bandwidth as the sum of all port capacities. For example, a switch with 24×25G ports needs at least 600Gbps of internal bandwidth in each direction (1.2Tbps when quoted full duplex) to be non-blocking. This design is fundamental wherever many devices transmit at once, such as high-density server racks, cloud computing nodes, or multimedia editing studios. Wire speed describes per-port forwarding performance. Non-blocking, by contrast, describes the switch’s ability to carry traffic between all ports at peak rates simultaneously. Note that non-blocking does not prevent output contention. If two 25G ports both send at full rate to a third 25G port, that port is oversubscribed 2:1. The excess must be buffered or dropped, however large the fabric is.
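
Non-blocking has a precise limit worth making explicit. The switch can only deliver traffic that each port’s own link is fast enough to send and receive. A traffic matrix can express this condition: entry [i][j] is the load port i offers to port j. A non-blocking switch should carry any matrix in which no row (what a port sends) and no column (what a port receives) exceeds the port speed. The function and the four-port examples in this minimal sketch are illustrative assumptions.

```python
from typing import Sequence


def is_admissible(
    traffic_gbps: Sequence[Sequence[float]],
    port_speed_gbps: float,
    tolerance: float = 1e-9,
) -> bool:
    """True if no port sends or receives more than its line rate.

    traffic_gbps is an n x n matrix: traffic_gbps[i][j] is the load offered
    by input port i to output port j. A non-blocking fabric should carry any
    admissible matrix without loss; an inadmissible one congests at the
    output link no matter how large the fabric is.
    """
    n = len(traffic_gbps)
    sends_ok = all(sum(row) <= port_speed_gbps + tolerance for row in traffic_gbps)
    receives_ok = all(
        sum(traffic_gbps[i][j] for i in range(n)) <= port_speed_gbps + tolerance
        for j in range(n)
    )
    return sends_ok and receives_ok


if __name__ == "__main__":
    # Four 25G ports. Pairwise exchange: every port busy, none overloaded.
    pairs = [[0, 25, 0, 0], [25, 0, 0, 0], [0, 0, 0, 25], [0, 0, 25, 0]]
    # Incast: ports 1-3 all send at full rate to port 0 (3:1 at port 0's output).
    incast = [[0, 0, 0, 0], [25, 0, 0, 0], [25, 0, 0, 0], [25, 0, 0, 0]]
    print(is_admissible(pairs, 25))   # True
    print(is_admissible(incast, 25))  # False
```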

The Difference Between Non-Blocking and Wire Speed

While related, non-blocking and wire speed are distinct concepts. Wire speed ensures that individual ports can forward at their maximum rate; non-blocking guarantees that this holds for all ports concurrently. A switch might support wire speed on each port yet still be blocking if its internal fabric can’t sustain the aggregate load. Imagine a highway where each lane has a high speed limit (wire speed) but the interchanges are too narrow, causing jams when many cars merge (a non-blocking failure). Now apply that to peak usage such as end-of-day backups or video rendering. A non-blocking switch maintains performance as long as no output port is oversubscribed, while a blocking one may slow down or drop packets. This is why non-blocking is often associated with higher-end, enterprise-class switches designed for mission-critical applications.
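
The highway analogy can be turned into a rough fluid model of a blocking switch. This sketch assumes that, once overloaded, the fabric shares its capacity proportionally among all offered traffic. That is a simplification; real switches queue and drop according to their own scheduling. The 240Gbps fabric in the example is hypothetical.

```python
from typing import Sequence


def delivered_fraction(offered_gbps_per_port: Sequence[float], fabric_gbps: float) -> float:
    """Fraction of offered traffic a switch fabric can carry (simplified fluid model).

    Assumes every port individually runs at wire speed and the only bottleneck
    is the fabric's total capacity in one direction, shared proportionally.
    """
    total = sum(offered_gbps_per_port)
    if total <= fabric_gbps:
        return 1.0
    return fabric_gbps / total


if __name__ == "__main__":
    fabric = 240.0  # hypothetical: a 48x10G switch with half the fabric it needs
    quiet = [10.0] * 12      # 12 busy ports: 120 Gbps offered
    backups = [10.0] * 36    # 36 busy ports: 360 Gbps offered
    print(f"quiet hour:  {delivered_fraction(quiet, fabric):.0%} delivered")
    print(f"backup hour: {delivered_fraction(backups, fabric):.0%} delivered")
```

Every port would pass a single-port wire-speed test in both cases. Only the aggregate load during the backup window exposes the blocking.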

Why Non-Blocking Matters in Modern Networks

As networks evolve to support bandwidth-intensive applications like AI workloads, 4K/8K video streaming, and real-time analytics, non-blocking architecture becomes increasingly important. It prevents internal bottlenecks that can undermine investments in high-speed ports. For businesses scaling their infrastructure, non-blocking switches offer better ROI by ensuring that all purchased bandwidth is usable simultaneously, without degradation. When selecting a switch, check vendor specifications for non-blocking or oversubscription ratings, which are often expressed as a ratio. A 1:1 rating means the internal bandwidth matches the total port capacity. A figure such as 3:1 means the ports can offer three times more traffic than the switch (or its uplinks) can carry. In top-of-rack designs, the same ratio is also used to compare total downlink capacity with total uplink capacity. These ratings are especially critical for top-of-rack switches in data centers and core switches in enterprise networks, where traffic patterns are unpredictable and heavy.
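
You can compute oversubscription ratios yourself from a port list. The sketch below compares total downlink (server-facing) capacity with total uplink capacity on a top-of-rack switch. The port counts are hypothetical examples. The same arithmetic applies when comparing total port capacity with fabric capacity.

```python
from typing import Sequence, Tuple

PortList = Sequence[Tuple[int, float]]  # (number of ports, speed in Gbps)


def oversubscription_ratio(downlinks: PortList, uplinks: PortList) -> float:
    """Total downlink capacity divided by total uplink capacity (1.0 means 1:1)."""
    down = sum(count * speed for count, speed in downlinks)
    up = sum(count * speed for count, speed in uplinks)
    return down / up


if __name__ == "__main__":
    # Hypothetical top-of-rack port layouts.
    examples = {
        "48x10G down, 4x40G up": ([(48, 10)], [(4, 40)]),
        "48x25G down, 8x100G up": ([(48, 25)], [(8, 100)]),
        "32x100G down, 8x400G up": ([(32, 100)], [(8, 400)]),
    }
    for name, (down, up) in examples.items():
        print(f"{name}: {oversubscription_ratio(down, up):g}:1")
```

The three layouts come out at 3:1, 1.5:1, and 1:1 respectively. Only the last is non-blocking at the uplink.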

Putting It All Together: Choosing the Right Switch

How These Concepts Interrelate in Real-World Scenarios

In practice, line rate, wire speed, and non-blocking work together to define a switch’s overall capability:

- Line rate sets the upper bound for each port.
- Wire speed ensures that bound is achievable under load at every packet size.
- Non-blocking guarantees it can be sustained across all ports simultaneously.

For example, consider a video production studio editing 4K footage. Its switches need high line rate ports to move large files, wire speed to forward that traffic without drops, and a non-blocking architecture to let multiple editors access shared storage concurrently. Ignoring any one aspect could lead to choppy playback, missed deadlines, or wasted bandwidth.
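
You can screen the three properties together against a datasheet. In this minimal sketch, the `SwitchSpec` fields are hypothetical stand-ins for figures vendors publish under varying names. The code assumes switching capacity is quoted full duplex. It also allows 0.1% slack, because forwarding rates are often rounded (for example, to 14.88 Mpps per 10G port). Passing this check is not proof of performance, only a sign that the published numbers are consistent with each other.

```python
from dataclasses import dataclass
from typing import List

# 64-byte minimum frame + 8 B preamble/SFD + 12 B inter-frame gap, in bits.
MIN_FRAME_BITS_ON_WIRE = (64 + 20) * 8
ROUNDING_SLACK = 0.999  # tolerate ~0.1% rounding in published figures


@dataclass
class SwitchSpec:
    """Hypothetical datasheet fields; real vendors name and define these differently."""
    port_count: int
    port_speed_gbps: float
    forwarding_rate_mpps: float     # claimed aggregate forwarding rate
    switching_capacity_gbps: float  # assumed to be quoted full duplex


def screen_spec(spec: SwitchSpec, needed_port_gbps: float) -> List[str]:
    """Return a list of concerns; an empty list means the figures are consistent."""
    concerns = []
    # Line rate: are the ports fast enough for the workload at all?
    if spec.port_speed_gbps < needed_port_gbps:
        concerns.append("line rate: ports are slower than the workload requires")
    per_direction_gbps = spec.port_count * spec.port_speed_gbps
    # Wire speed: can the forwarding engine handle minimum-size frames on every port?
    needed_mpps = per_direction_gbps * 1e9 / MIN_FRAME_BITS_ON_WIRE / 1e6
    if spec.forwarding_rate_mpps < needed_mpps * ROUNDING_SLACK:
        concerns.append(
            f"wire speed: {spec.forwarding_rate_mpps:g} Mpps < {needed_mpps:.1f} Mpps needed")
    # Non-blocking: does the fabric cover every port in both directions?
    if spec.switching_capacity_gbps < 2 * per_direction_gbps * ROUNDING_SLACK:
        concerns.append(
            f"non-blocking: {spec.switching_capacity_gbps:g} Gbps < "
            f"{2 * per_direction_gbps:g} Gbps needed")
    return concerns


if __name__ == "__main__":
    # Illustrative figures, not taken from any real product.
    candidate = SwitchSpec(port_count=48, port_speed_gbps=10,
                           forwarding_rate_mpps=714.24, switching_capacity_gbps=480)
    for concern in screen_spec(candidate, needed_port_gbps=10) or ["no concerns"]:
        print(concern)
```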

Key Considerations for Your Deployment

When evaluating switches, start by assessing your traffic profiles. For light, intermittent traffic, as in a small office, a basic switch with adequate port speeds may suffice. For data-heavy environments, prioritize wire speed and non-blocking. Look beyond datasheet claims: request throughput test results from vendors, especially for mixed packet sizes and full-load conditions. Also consider scalability. A non-blocking switch may cost more upfront but can postpone upgrades as traffic grows. Vendors such as telecomate.com offer switches that emphasize these features and provide detailed performance metrics to help you match hardware to needs without overprovisioning.

The Role of Software and Management

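Hardware specs alone aren’t enough; software also shapes whether a switch delivers its advertised performance. The switch’s operating system can determine which features run in the hardware fast path and which fall back to slower processing. Features like efficient queue management, adaptive routing, and hardware acceleration help the switch make the most of its wire-speed and non-blocking capacity under congestion. Software cannot raise the hardware’s limits, but poor configuration can keep you well below them. When comparing options, explore the switch OS’s capabilities, such as support for advanced QoS or traffic shaping, which can help maintain performance under varied conditions.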

In summary, understanding line rate, wire speed, and non-blocking is essential for anyone responsible for network infrastructure. These terms aren’t just marketing fluff; they represent tangible performance characteristics that directly impact reliability, latency, and scalability. By focusing on real-world needs rather than theoretical maximums, you can select switches that deliver consistent performance, avoid bottlenecks, and support your business objectives. Whether you’re upgrading an existing network or designing from scratch, prioritizing these aspects will ensure your investment pays off in smoother operations and future-ready capacity. For tailored solutions that balance these factors, exploring offerings from telecomate.com can provide a solid starting point, backed by performance data and industry expertise.
