Introduction

The H3C S9850-32H is a purpose-built 32-port 100G QSFP28 switch. It suits environments that prioritize simplicity: a repeatable “building block” for spine or aggregation layers, and a deployment model that scales by replicating proven designs rather than engineering each rack from scratch.

It also covers the features contemporary data centers expect, including VXLAN, MP-BGP EVPN, FCoE, PFC/ETS/DCBX, DRNI (M-LAG), and advanced telemetry (INT), with lossless-Ethernet capabilities aimed at RoCE.

Consider this switch if your primary goal for the next 12-24 months is scaling out (adding more pods) on a stable 100G fabric rather than moving to 400G uplinks everywhere. Look elsewhere if your design calls for very high-density 100G leaf switching with native 400G uplinks; that is a different category of hardware.

Quick Decision Guide

You’re a good candidate for the S9850-32H if you agree with at least two of the following:

- I need a straightforward 32-port 100G (40/100G) QSFP28 unit for a spine or aggregation layer.

- My 100G ports must be flexible (40/100G auto-sensing with breakout capability) to accommodate real-world migration schedules and rack variations.

- I require modern data center features (VXLAN+EVPN, DRNI, PFC/ETS/DCBX, INT telemetry) without switching to an entirely different platform family.

If the first point doesn’t apply and you instead need “48+ 100G ports with 400G uplinks,” you are likely considering the wrong class of switch for this role.

What This Review Covers

This is a practical, role-oriented review for network architects, engineers, and buyers focused on deployment. It aims to answer:

- Where the S9850-32H best fits within a 2026 spine-leaf fabric.

- The practical implications of its port design and breakout capabilities.

- Its readiness for features like EVPN/VXLAN, storage, and AI-style traffic patterns.

- A practical deployment playbook and RFQ guidance to avoid surprises.

This is not a synthetic benchmark report. In production environments, operational stability and design repeatability often outweigh theoretical peak performance numbers.

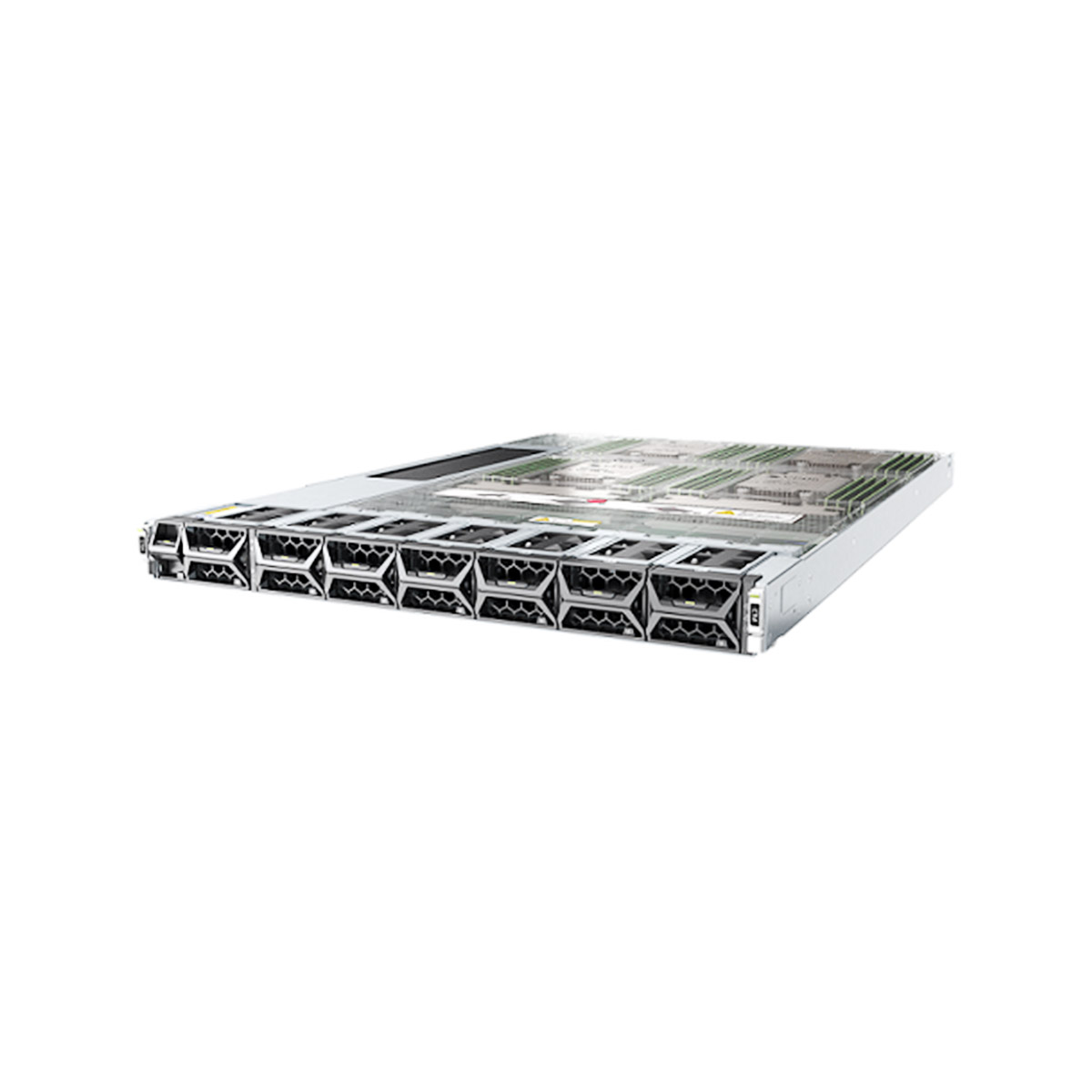

Hardware Snapshot

Table 1: S9850-32H Key Hardware Specifications

| Item | Details | Why It Matters |
|---|---|---|
| High-Speed Ports | 32 x 100G QSFP28 (100G/40G auto-sensing); each port can be split into 4 x 25G or 4 x 10G interfaces. | Enables a standardized 100G “brick” while accommodating migration and varying rack requirements. |
| Management / OOB | 2 x 1G SFP ports; 2 dedicated out-of-band management ports; mini USB console port; USB port. | OOB design directly affects operational safety and ease of troubleshooting. |
| Cooling Flexibility | Field-changeable airflow direction via selectable fan trays. | Matches your data center’s hot/cold aisle layout (front-to-back or back-to-front). |
| Power & Redundancy | Removable 650W AC or DC power modules; 1+1 power redundancy. | Ensures predictable high availability and prevents late-stage procurement errors. |
| Forwarding Capacity | H3C positions the series at up to 6.4 Tbps forwarding capacity. | Places it firmly in the mainstream “32x100G” data center switch class. |
| Performance Reference | H3C catalog lists 6.4 Tbps and 2024 Mpps. | Useful for comparing equivalent switch tiers during procurement. |
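The 6.4 Tbps figure follows the usual vendor convention of counting both directions of every full-duplex port. As a quick check, with all 32 ports running at 100G:

$$
C = N_{\text{ports}} \times R_{\text{port}} \times 2 = 32 \times 100\ \text{Gb/s} \times 2 = 6400\ \text{Gb/s} = 6.4\ \text{Tb/s}
$$

Breakout does not raise this ceiling: 4 x 25G still totals 100G per port, and 4 x 10G uses only 40G of it.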

In practice, the S9850-32H is best viewed as a repeatable 100G building block—ideal for spine/aggregation layers and small-to-midsize pods where 32x100G provides the cleanest design math.

Role in a 2026 Spine-Leaf Fabric

Role A: The 100G “Spine Brick”

For two-tier fabrics where spines provide predictable ECMP paths and leaves scale by adding racks, a 32x100G spine is often the simplest and most stable choice. The S9850-32H fits this role well: it keeps pod design compact and repeatable, and its flexible port options reduce rack-specific exceptions.
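To make the “design math” concrete, here is a minimal Python sketch of two-tier sizing. It assumes each leaf runs one 100G uplink to every spine and that every spine port not explicitly reserved (for example, for border leaves) terminates a leaf. The 48 x 25G / 8 x 100G leaf profile is hypothetical and only illustrates the calculation.

```python
from dataclasses import dataclass

SPINE_PORTS = 32        # S9850-32H: 32 x 100G QSFP28
SPINE_PORT_GBPS = 100


@dataclass
class LeafProfile:
    """Hypothetical leaf profile; numbers are illustrative, not a specific product."""
    downlink_ports: int
    downlink_gbps: int
    uplink_ports: int       # one uplink from each leaf to each spine
    uplink_gbps: int


def size_pod(leaf: LeafProfile, reserved_spine_ports: int = 0) -> dict:
    """Two-tier leaf-spine arithmetic for a pod built from 32-port 100G spines."""
    if leaf.uplink_gbps != SPINE_PORT_GBPS:
        raise ValueError("this sketch assumes leaf uplinks run at the spine port speed")
    max_leaves = SPINE_PORTS - reserved_spine_ports   # e.g. ports kept for border leaves
    downlink = leaf.downlink_ports * leaf.downlink_gbps
    uplink = leaf.uplink_ports * leaf.uplink_gbps
    return {
        "spines": leaf.uplink_ports,                  # each uplink lands on a different spine
        "max_leaves": max_leaves,
        "oversubscription": downlink / uplink,        # leaf downlink:uplink ratio
        "max_server_ports": max_leaves * leaf.downlink_ports,
    }


leaf = LeafProfile(downlink_ports=48, downlink_gbps=25, uplink_ports=8, uplink_gbps=100)
print(size_pod(leaf))
# {'spines': 8, 'max_leaves': 32, 'oversubscription': 1.5, 'max_server_ports': 1536}
```

Reserving spine ports for border leaves or interconnects reduces `max_leaves` one-for-one, which is why it helps to fix that reservation in the pod template before the first rack is built.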

Role B: Aggregation / High-Speed Interconnect Layer

In enterprise data centers (private cloud, virtualization clusters, storage-heavy east-west traffic), a layer is needed to consolidate traffic and provide operational guardrails. The S9850-32H supports this with VXLAN, MP-BGP EVPN, FCoE, and redundancy via DRNI (M-LAG).

Role C: Leaf/ToR in Specific Cases

H3C notes the series can operate as a Top-of-Rack switch. The decision hinges on density economics: it works well for 100G-heavy racks that aren’t ultra-dense. For extremely dense 100G access with built-in higher-speed uplinks, a different leaf-tier switch is typically more suitable.

Ideal Deployment Scenarios

Scenario 1: The “Clean 100G Pod” with Predictable Growth

- Goal: Build a stable, replicable fabric template for private cloud or enterprise DCs.

- Why it Fits: Offers a clean 32x100G block with modern features (VXLAN+EVPN) and redundancy via DRNI.

- Tip: Standardize the pod template first; avoid customizing every individual rack.

Scenario 2: Managing Mixed 40G/100G Migration

- Goal: Handle legacy 40G racks, refreshed 100G racks, and special-purpose islands simultaneously.

- Why it Fits: Ports are 100G/40G auto-sensing and can break out to 4x25G/10G, enabling phased upgrades.

- Tip: Document a clear breakout policy (what’s allowed, forbidden, and how it scales) from the start.
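A breakout policy is easiest to enforce when it is written down as data and checked mechanically. The Python sketch below encodes the four per-port modes listed in the hardware table; the rule that breakout is only permitted on ports 25-32 is a hypothetical example of a site policy, not an H3C restriction.

```python
# Per-port modes from the hardware table: native 100G or 40G, or breakout to
# four 25G or four 10G interfaces. Values are (logical interfaces, Gbps each).
MODES = {"100G": (1, 100), "40G": (1, 40), "4x25G": (4, 25), "4x10G": (4, 10)}
TOTAL_PORTS = 32

# Hypothetical site policy: breakout is only allowed on one reserved port block.
BREAKOUT_PORTS = range(25, 33)


def validate_plan(plan: dict[int, str]) -> tuple[list[str], int]:
    """Return (policy violations, logical interface count) for a port-mode plan."""
    errors, logical = [], 0
    for port, mode in sorted(plan.items()):
        if not 1 <= port <= TOTAL_PORTS:
            errors.append(f"port {port}: outside 1-{TOTAL_PORTS}")
            continue
        if mode not in MODES:
            errors.append(f"port {port}: unknown mode {mode!r}")
            continue
        lanes, _gbps = MODES[mode]
        if lanes > 1 and port not in BREAKOUT_PORTS:
            errors.append(f"port {port}: {mode} breakout outside ports "
                          f"{BREAKOUT_PORTS.start}-{BREAKOUT_PORTS.stop - 1}")
        logical += lanes
    return errors, logical


plan = {p: "100G" for p in range(1, 25)}
plan.update({p: "4x25G" for p in range(25, 33)})
print(validate_plan(plan))      # ([], 56)
plan[3] = "4x10G"
print(validate_plan(plan))      # (['port 3: 4x10G breakout outside ports 25-32'], 59)
```

Keeping breakout on a fixed port block means breakout cables, labels, and spares follow one predictable pattern per pod.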

Scenario 3: Storage-Heavy East-West Traffic

- Goal: Prevent performance issues during storage rebuilds or traffic bursts that cause tail latency spikes.

- Why it Fits: Offers strong visibility tools like INT telemetry, sFlow, NetStream, and real-time buffer/queue monitoring.

- Tip: Make this observability a core part of the acceptance criteria, not an add-on after the first incident.

Scenario 4: “Operations-First” Environments

- Goal: Support frequent, safe change windows with high resiliency and observability.

- Why it Fits: Supports automated O&M, multiple monitoring methods, and DRNI, which enables member-by-member upgrades with minimal traffic impact.

- Tip: Practice and document rollback procedures, not just upgrade steps.

Feature Review for Modern Networks

EVPN-VXLAN Readiness

The S9850 series supports VXLAN with MP-BGP EVPN as the control plane. Because EVPN advertises MAC/IP reachability through BGP instead of relying on flood-and-learn, it simplifies configuration and reduces flooding.

- Use it when you need scalable L2 segmentation, multi-tenancy, or a cleaner control plane.

- Skip it for now if your environment is stable, single-tenant, and already uses L3-to-the-rack.
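Two numbers drive VXLAN planning: the 24-bit VNI (about 16 million segments versus 4094 usable VLAN IDs) and the encapsulation overhead the underlay must absorb. This Python sketch uses the standard RFC 7348 header sizes; it assumes the inner 802.1Q tag is stripped unless `inner_vlan_tag=True`, and it does not model any H3C-specific MTU setting.

```python
# Header sizes in bytes for VXLAN (RFC 7348) over an IPv4 or IPv6 underlay.
VXLAN_HDR = 8
UDP_HDR = 8
OUTER_IP = {"ipv4": 20, "ipv6": 40}
INNER_ETH = 14          # inner Ethernet header carried inside the tunnel
DOT1Q = 4               # optional inner 802.1Q tag


def underlay_ip_mtu(inner_ip_mtu: int = 1500, underlay: str = "ipv4",
                    inner_vlan_tag: bool = False) -> int:
    """Minimum underlay IP MTU that carries inner packets without fragmentation."""
    inner_frame = inner_ip_mtu + INNER_ETH + (DOT1Q if inner_vlan_tag else 0)
    return OUTER_IP[underlay] + UDP_HDR + VXLAN_HDR + inner_frame


VNI_SPACE = 2 ** 24     # 24-bit VXLAN Network Identifier
VLAN_SPACE = 4094       # usable 12-bit VLAN IDs (0 and 4095 are reserved)

print(underlay_ip_mtu())                    # 1550
print(underlay_ip_mtu(underlay="ipv6"))     # 1570
print(underlay_ip_mtu(inner_ip_mtu=9000))   # 9050
print(VNI_SPACE, VLAN_SPACE)                # 16777216 4094
```

In practice, many fabrics simply raise the underlay MTU to jumbo size so that tenant MTUs never need to be reduced.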

Lossless Ethernet & RoCE

The platform supports PFC, ETS, and DCBX for low-latency, zero-packet-loss services like FC storage and RDMA (RoCE).

- 2026 Reality Check: Lossless designs add value but require careful configuration and validation. Treat this as a staged project, not a simple checkbox.
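Validating a lossless design starts before any configuration is pushed. The Python sketch below checks a hypothetical DCB plan (the `DcbPlan` structure is illustrative, not an H3C configuration format): all eight 802.1p priorities map to an ETS class, ETS weights total 100%, and each PFC-enabled priority gets a guaranteed bandwidth share in a class that carries no lossy traffic. That last rule, and the use of priority 3 for RoCE in the example, are assumptions reflecting common practice.

```python
from dataclasses import dataclass


@dataclass
class DcbPlan:
    """Hypothetical DCB plan for one switch role (not an H3C configuration format)."""
    pfc_priorities: set[int]      # 802.1p priorities with PFC enabled (lossless)
    prio_to_tc: dict[int, int]    # 802.1p priority -> ETS traffic class
    tc_weights: dict[int, int]    # ETS traffic class -> bandwidth share in percent


def check_plan(plan: DcbPlan) -> list[str]:
    """Return human-readable problems; an empty list means the plan passes these checks."""
    problems = []
    missing = set(range(8)) - set(plan.prio_to_tc)
    if missing:
        problems.append(f"priorities without a traffic class: {sorted(missing)}")
    total = sum(plan.tc_weights.values())
    if total != 100:
        problems.append(f"ETS weights sum to {total}%, expected 100%")
    for prio in sorted(plan.pfc_priorities):
        tc = plan.prio_to_tc.get(prio)
        if tc is None or plan.tc_weights.get(tc, 0) <= 0:
            problems.append(f"PFC priority {prio} has no ETS bandwidth guarantee")
            continue
        # Site rule (assumption): a lossless class must not also carry lossy priorities.
        lossy = [p for p, c in plan.prio_to_tc.items()
                 if c == tc and p not in plan.pfc_priorities]
        if lossy:
            problems.append(f"PFC priority {prio} shares class {tc} "
                            f"with lossy priorities {sorted(lossy)}")
    return problems


roce = DcbPlan(pfc_priorities={3},
               prio_to_tc={**{p: 0 for p in range(8)}, 3: 1},
               tc_weights={0: 50, 1: 50})
print(check_plan(roce))   # []
```

A check like this is a gate before staging, not a substitute for measuring pause frames and drops under real load.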

DRNI (M-LAG) for Availability

DRNI provides device-level link aggregation and redundancy, enabling server dual-homing without complex STP configurations. It simplifies topology and supports independent member upgrades.

Visibility & Telemetry

Advanced monitoring is a key differentiator. H3C highlights:

- INT telemetry for detailed timestamp/port/buffer data.

- sFlow, NetStream, and SPAN/RSPAN/ERSPAN.

- Real-time buffer and queue monitoring.

For AI, storage, or dynamic environments, this visibility is crucial for rapid problem resolution.

Real-World Performance Considerations

Beyond the 6.4 Tbps capacity, operational experience depends on handling:

- Microbursts causing queue pressure.

- Elephant flows dominating paths.

- ECMP hashing imbalances.

- Effective congestion detection and response.
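ECMP hashing pins each flow to one path, so balance depends on the flow mix rather than on total load. The Python sketch below uses CRC32 over the 5-tuple as a stand-in hash (switch ASICs use their own hash functions and seeds) to compare uplink load for many small flows against the same mix plus four 40 Gbps elephant flows on UDP port 4791 (RoCEv2). Exact per-uplink numbers depend on the hash, so no expected output is shown.

```python
import random
import zlib
from collections import Counter


def pick_uplink(five_tuple: tuple, n_uplinks: int) -> int:
    """Stand-in ECMP hash (CRC32 of the 5-tuple); real ASIC hashes and seeds differ."""
    key = "|".join(map(str, five_tuple)).encode()
    return zlib.crc32(key) % n_uplinks


def uplink_load(flows: list, n_uplinks: int) -> Counter:
    """Sum offered Gbps per uplink when each flow is pinned to one path by its hash."""
    load = Counter()
    for five_tuple, gbps in flows:
        load[pick_uplink(five_tuple, n_uplinks)] += gbps
    return load


random.seed(7)
# 2000 small TCP flows (0.05 Gbps each) toward one destination: 100 Gbps in total.
mice = [((f"10.0.0.{random.randint(1, 254)}", "10.1.0.9", 6,
          random.randint(1024, 65535), 443), 0.05) for _ in range(2000)]
# Four 40 Gbps RoCEv2 flows (UDP destination port 4791): 160 Gbps in total.
elephants = [(("10.0.0.5", "10.1.0.9", 17, 49152 + i, 4791), 40.0) for i in range(4)]

for label, flows in (("mice only", mice), ("mice + elephants", mice + elephants)):
    load = uplink_load(flows, n_uplinks=4)
    print(label, {u: round(g, 1) for u, g in sorted(load.items())})
```

With four 100G uplinks the bundle is only about 65% loaded (260 of 400 Gbps), yet if two elephants hash to the same uplink, that port is offered roughly 105 Gbps. This is the “one uplink consistently overloaded” pattern described in the symptoms table.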

Common Performance Symptoms & Checks

| Symptom | Likely Cause | First Checks |
|---|---|---|
| Tail latency spikes at peak | Microbursts / queue pressure | Check real-time queue visibility; identify congestion points. |
| One uplink consistently overloaded | ECMP/LAG hashing imbalance | Verify flow distribution and hashing policy consistency. |
| “Random” packet loss under load | Buffer exhaustion, misconfigured QoS/DCB | Inspect drop counters, queue thresholds; validate PFC/ETS config. |
| Service interruption during upgrades | Lack of safe change process | Use DRNI and practice member-by-member upgrade strategies. |
| Hard-to-diagnose incidents | Lack of telemetry | Enable INT/flow tools early and establish a performance baseline. |
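The first row of the table is why sampling granularity matters. In this Python sketch a hypothetical 100G egress port carries 10% background load plus eighteen 1 ms bursts of 2:1 incast; the slot size and traffic pattern are assumptions chosen for illustration. A one-second average looks harmless while the queue briefly holds 12.5 MB.

```python
LINK_BPS = 100e9   # one 100G egress port
SLOT_S = 0.001     # 1 ms slots (assumed telemetry granularity)


def build_trace(slots: int = 1000, background: float = 0.10) -> list[float]:
    """Hypothetical offered load per slot, as a multiple of egress line rate."""
    trace = [background] * slots
    for s in range(100, slots, 50):
        trace[s] = 2.0   # 2:1 incast: two senders at line rate toward one port
    return trace


def analyse(trace: list[float]) -> tuple[float, float, float]:
    """Return (average utilization, peak slot utilization, peak queue in MB)."""
    cap = LINK_BPS * SLOT_S              # bits the port can transmit per slot
    queue = peak_queue = 0.0
    sent = []
    for offered in trace:
        backlog = queue + offered * cap
        out = min(backlog, cap)          # transmit at most line rate
        queue = backlog - out            # the excess waits in the buffer
        sent.append(out / cap)
        peak_queue = max(peak_queue, queue)
    return sum(sent) / len(sent), max(sent), peak_queue / 8 / 1e6


avg, peak, q_mb = analyse(build_trace())
print(f"1 s average: {avg:.1%}, peak 1 ms slot: {peak:.0%}, peak queue: {q_mb:.1f} MB")
# 1 s average: 13.4%, peak 1 ms slot: 100%, peak queue: 12.5 MB
```

A baseline built from coarse interface counters would show this port at about 13% and miss the bursts entirely, which is why real-time queue and buffer monitoring belongs in the acceptance criteria.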

Strengths & Limitations

Strengths

- Clean, flexible 32x100G QSFP28 building block with excellent breakout options.

- Strong data center feature set: VXLAN/EVPN, FCoE, PFC/ETS/DCBX, DRNI.

- Operational focus on visibility (INT, sFlow, queue monitoring).

- Practical design: field-changeable airflow, modular PSUs/fans, dedicated OOB ports.

Limitations / Trade-offs

- Not designed for maximum 100G leaf density plus native 400G uplinks.

- Breakout flexibility is powerful but requires a clear policy to avoid cabling and spare part complexity.

- Enabling all advanced features (overlay, lossless) on day one increases risk. An incremental, validated rollout is essential.

Deployment Recommendations

Design Principles

- Standardize pod templates (ports, uplinks, breakout rules, spares) before scaling.

- Upgrade shared bottlenecks (uplinks/spines) before refreshing every rack.

- For EVPN/VXLAN, define and consistently maintain gateway placement (ToR vs. border leaf).

- For PFC/ETS, treat it as a production rollout: validate, stage, and measure.

Cabling & Optics Planning

- Define distance tiers (in-rack, row, room, inter-room).

- Select optics for each tier.

- Establish breakout rules and labeling conventions.

- Then finalize your fiber patch cables and spare parts.

This prevents the common last-minute issue of switches arriving without compatible optics or cables.
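The tier-first approach above can be turned into a bill of materials mechanically. This Python sketch assumes four example tiers and media choices (passive DAC in-rack, AOC within a row, 100GBASE-SR4 on OM4 up to 100 m, 100GBASE-LR4 up to 10 km) and a hypothetical 10% spares policy; the part names are generic descriptions, and every part should be confirmed against H3C's transceiver compatibility list.

```python
from collections import Counter

# Assumed distance tiers with example media choices. Reach limits reflect common
# practice (passive DAC a few metres, SR4 up to 100 m on OM4, LR4 up to 10 km).
TIERS = [
    # (tier, max metres, media part, optics at both ends?, fiber part)
    ("in-rack", 3, "100G QSFP28 passive DAC", False, None),
    ("row", 30, "100G QSFP28 AOC", False, None),
    ("room", 100, "100GBASE-SR4 optic", True, "MPO-12 OM4 trunk"),
    ("inter-room", 10_000, "100GBASE-LR4 optic", True, "duplex LC SMF patch"),
]
SPARE_PERCENT = 10  # hypothetical spares policy


def tier_for(distance_m: float) -> tuple:
    """Return the first (shortest) tier whose reach covers the link."""
    for tier in TIERS:
        if distance_m <= tier[1]:
            return tier
    raise ValueError(f"{distance_m} m exceeds every defined tier")


def build_bom(link_distances_m: list[float]) -> Counter:
    """Count parts per link, then add spares rounded up per part."""
    bom = Counter()
    for d in link_distances_m:
        _tier, _reach, media, optics, fiber = tier_for(d)
        if optics:
            bom[media] += 2       # one transceiver at each end of the link
            bom[fiber] += 1
        else:
            bom[media] += 1       # DAC/AOC: cable and connectors are one part
    for part in list(bom):        # snapshot keys before adding spare lines
        bom[f"spare: {part}"] = (bom[part] * SPARE_PERCENT + 99) // 100
    return bom


links = [2] * 16 + [25] * 8 + [80] * 6 + [900] * 2
for part, qty in build_bom(links).items():
    print(f"{qty:4d}  {part}")
```

Spares are rounded up with integer arithmetic so that small quantities still get at least one spare and floating-point rounding cannot inflate counts.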

Cross-Brand Alternatives (32x100G Class)

| Brand | Comparable Model | Published “Class” Highlights | Practical Note |
|---|---|---|---|
| H3C | S9850-32H | 32x100G QSFP28; VXLAN+EVPN; PFC/ETS/DCBX; DRNI; INT. | Strong DC feature stack with a focus on operational visibility. |
| Huawei | CloudEngine 8850E-32CQ-EI | 32x100GE QSFP28; 6.4 Tbps; VXLAN+EVPN mentioned. | A solid reference point in the “32x100G spine/agg” tier. |
| Cisco | Nexus 9332C | 32x40/100G QSFP28; 6.4 Tbps; breakout not supported. | The lack of breakout support may force a different cabling strategy. |
| Ruijie | RG-S6510-32CQ | 32x100GE QSFP28; highlights 32MB buffer; M-LAG. | Strong messaging around handling traffic bursts and lossless designs. |

Frequently Asked Questions (FAQs)

Q1: Is the S9850-32H best as a spine or leaf switch?

A: It is most commonly and effectively deployed as a 100G spine or aggregation building block due to its clean port count. It can serve as a leaf in smaller pods, but high-density 100G leaf requirements may justify a different switch tier.

Q2: Does it support EVPN-VXLAN?

A: Yes. H3C positions the S9850 series as supporting VXLAN with MP-BGP EVPN as a control plane.

Q3: Can the 100G ports be split?

A: Yes. Ports are auto-sensing (100G/40G) and each can be split into four 25G or 10G interfaces. A clear breakout policy is recommended.

Q4: Do I need lossless Ethernet (PFC) from day one for AI/storage?

A: Not necessarily. These features help but require careful configuration. Implement them as a staged, validated rollout rather than enabling everywhere immediately.

Q5: What lossless features are supported?

A: H3C lists support for PFC, ETS, and DCBX for low-latency, zero-packet-loss services like FC storage and RDMA.

Q6: Is FCoE supported?

A: Yes, FCoE is explicitly listed in the S9850 series feature set.

Q7: What is DRNI (M-LAG) used for?

A: DRNI provides device-level redundancy and link aggregation for dual-homed servers, simplifying topology and supporting independent member upgrades.

Q8: What visibility options are available?

A: H3C highlights INT telemetry, sFlow, NetStream, SPAN/RSPAN/ERSPAN, and real-time buffer/queue monitoring.

Q9: What causes “random slowness” in 100G deployments?

A: Often microbursts, ECMP hashing imbalance, or lack of visibility. Implement queue/buffer monitoring as part of initial acceptance.

Q10: How should I plan airflow and power?

A: Select fan trays for your aisle airflow (front-to-back/back-to-front). Choose and standardize on either 650W AC or DC PSUs early to avoid procurement delays.
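Both checks in this answer are mechanical enough to script. The Python sketch below assumes placeholder worst-case draw figures (take real values from the H3C datasheet) and an 80% loading ceiling as a hypothetical site rule. Under 1+1 redundancy, one surviving 650W PSU must carry the whole switch, and every switch’s fan trays must match the rack’s airflow direction.

```python
from dataclasses import dataclass

PSU_WATTS = 650  # removable AC or DC module, per the hardware table


@dataclass
class SwitchPlan:
    name: str
    max_draw_w: float   # worst-case draw from the vendor datasheet (placeholder here)
    airflow: str        # "front-to-back" or "back-to-front" fan trays ordered


def check_rack(switches: list[SwitchPlan], rack_airflow: str,
               derate: float = 0.8) -> list[str]:
    """Flag 1+1 power and airflow mismatches for one rack (hypothetical planning check)."""
    issues = []
    for sw in switches:
        # With 1+1 redundancy, a single surviving PSU must carry the whole load.
        if sw.max_draw_w > PSU_WATTS * derate:
            issues.append(f"{sw.name}: {sw.max_draw_w} W exceeds "
                          f"{derate:.0%} of one {PSU_WATTS} W PSU")
        if sw.airflow != rack_airflow:
            issues.append(f"{sw.name}: {sw.airflow} fan trays in a {rack_airflow} rack")
    return issues


rack = [SwitchPlan("spine-1", 450, "front-to-back"),
        SwitchPlan("spine-2", 600, "back-to-front")]
print(check_rack(rack, "front-to-back"))
```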

Q11: How does it compare to the Cisco Nexus 9332C?

A: Both are 32x100G spine-class switches. A key difference is that the Cisco 9332C does not support breakout cables, which may influence your cabling plan.

Q12: What is a comparable Huawei model?

A: The Huawei CloudEngine 8850E-32CQ-EI is a common reference in the 32x100GE, 6.4 Tbps class.

Q13: What is a comparable Ruijie model?

A: Ruijie’s RG-S6510-32CQ is positioned as a 32x100GE data center switch with a focus on buffer and redundancy.

Q14: What should be included in a quote?

A: Request a complete Bill of Materials: transceivers, breakout cables, fiber patches (by distance), spare PSUs/fans/optics, and deployment guidance if needed.

Q15: What’s a safe upgrade strategy for 2026?

A: First, build and instrument a repeatable 100G fabric template with full telemetry. Scale by copying this template. Consider speed upgrades (to 400G/800G) first at the shared bottleneck layers (e.g., spine).

Conclusion

If your 2026 strategy involves a repeatable 100G pod architecture with modern overlay capabilities and strong operational visibility, the H3C S9850-32H is a high-confidence choice. Its value is fully realized when flexible port use, redundancy planning, and comprehensive telemetry are treated as foundational elements of a standardized template, not as afterthoughts.
