In an era where data center workloads grow at 58% annually, how well server components work together determines computational success. Beyond headline specifications, modern server design depends on tight integration between processors, memory, storage, and interconnects, which enables workloads ranging from quantum simulations to real-time AI inference.

Processing Power Redefined

Modern CPUs like AMD EPYC 9754 and Intel Xeon Max Series demonstrate radical architectural shifts:

- 3D V-Cache Technology: up to 1,152MB of stacked L3 cache (on parts such as the EPYC 9684X) reduces memory latency by 34%

- Chiplet Design: 6nm I/O die with 5nm compute chiplets

- AMX Instructions: 2.7x faster matrix operations for AI workloads

Google’s TPU v5 servers leverage 4th Gen Xeons to achieve 89 petaFLOPS, processing 1.8 exabytes daily.

Memory Hierarchy Revolution

DDR5 and persistent memory reshape data accessibility:

- DDR5-6400: 51.2GB/s peak bandwidth per DIMM with on-die ECC protection

- Intel Optane PMem 300: 10μs access time for 4TB memory pools

- CXL 2.0 Interconnects: 100ns latency for coherent memory expansion
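The 51.2GB/s figure for DDR5-6400 follows directly from the transfer rate and the data bus width. A minimal sketch of that arithmetic, assuming a 64-bit data path per DIMM (two 32-bit subchannels) and decimal gigabytes; the function name is illustrative only:

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes).

    transfer_rate_mts: transfers per second in millions (6400 for DDR5-6400).
    bus_width_bits: data bits moved per transfer (assumed 64 per DIMM).
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1_000_000 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    print(f"DDR5-6400: {peak_bandwidth_gbs(6400):.1f} GB/s per DIMM")
    print(f"DDR5-4800: {peak_bandwidth_gbs(4800):.1f} GB/s per DIMM")
```

This is a theoretical ceiling. Sustained bandwidth is lower once refresh cycles, bank conflicts, and read/write turnarounds are accounted for.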

Meta’s AI training clusters using PMem reduced checkpoint times from 18 minutes to 23 seconds.

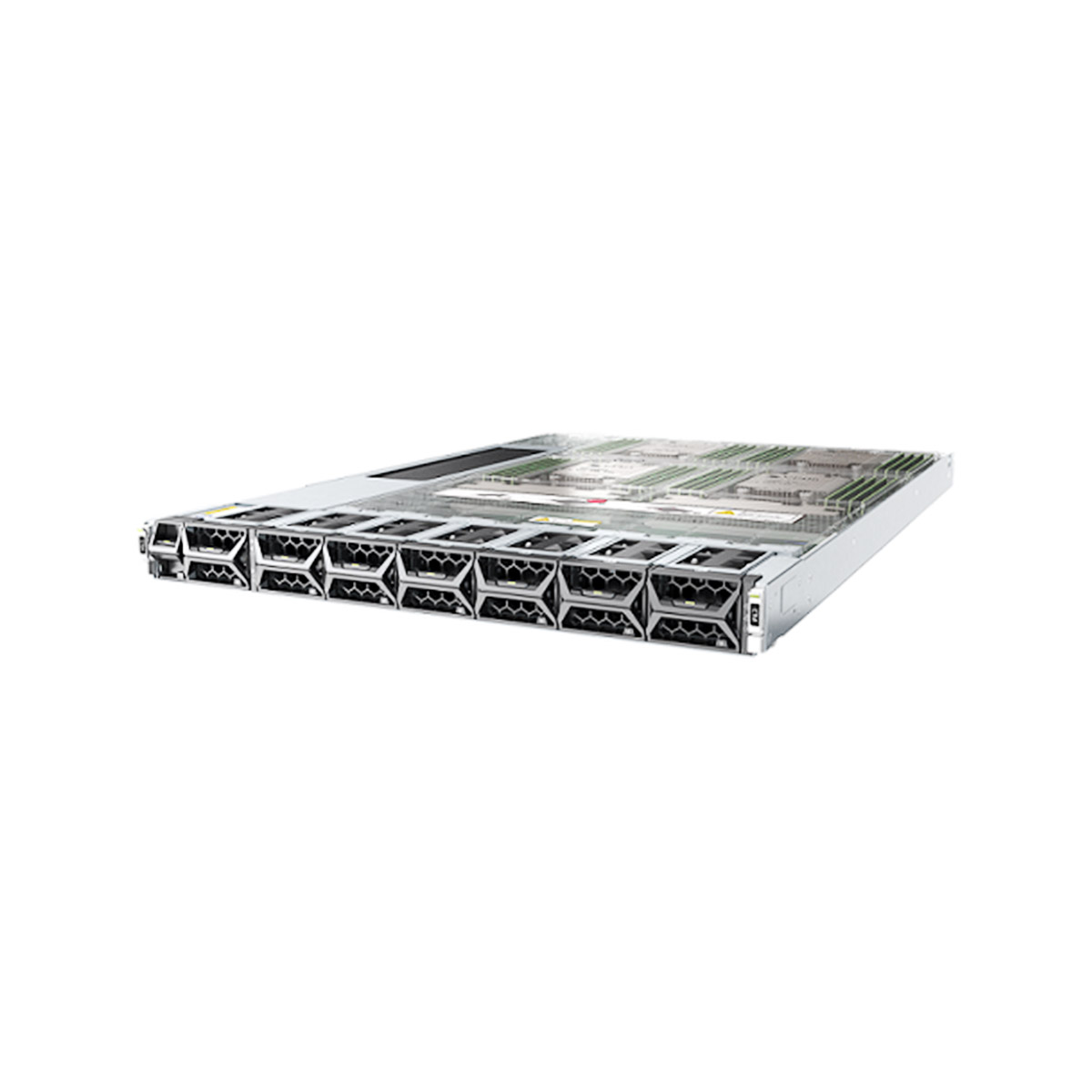

Storage Subsystem Innovations

Enterprise storage solutions balance speed and resilience:

- NVMe over Fabrics: 80μs latency across 100Gbps RDMA networks

- Quad-Level Cell NAND: 30% cost/TB reduction with 3K P/E cycles

- Storage Class Memory: 3D XPoint achieves 1M IOPS at 9μs latency
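Latency and IOPS figures like those above are linked by Little's Law: the average number of I/Os in flight equals throughput multiplied by latency. The sketch below applies it to the quoted numbers, assuming the latencies are averages under load; the function name is hypothetical:

```python
def required_queue_depth(iops: float, latency_us: float) -> float:
    """Little's Law: average I/Os in flight = throughput * latency."""
    return iops * (latency_us / 1_000_000)


if __name__ == "__main__":
    # Latencies taken from the list above; the 1M IOPS target is applied to both.
    for label, latency_us in [("3D XPoint at 9 us", 9), ("NVMe-oF at 80 us", 80)]:
        depth = required_queue_depth(1_000_000, latency_us)
        print(f"{label}: about {depth:.0f} I/Os in flight to sustain 1M IOPS")
```

Under these assumptions, the lower the latency, the less parallelism an application has to generate to reach the same IOPS.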

AWS Graviton3 instances with PCIe 5.0 SSDs deliver 14GB/s sequential reads, 2.3x the previous generation.

RAID Controller Evolution

Modern RAID cards like Broadcom MegaRAID 9600 series introduce:

- Tri-Mode Support: SAS/SATA/NVMe auto-negotiation

- CPURAM 32000: 16GB DDR4 cache with supercapacitor backup

- RAID 6 Acceleration: 2.4GB/s rebuild rates for 20TB drives
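To put the rebuild rate in perspective, the time to reconstruct one drive is simply capacity divided by rebuild throughput. A rough sketch, assuming decimal units and that the quoted 2.4GB/s is sustained for the whole rebuild (in practice the replacement drive's write speed and foreground I/O often limit it):

```python
def rebuild_hours(capacity_tb: float, rate_gb_per_s: float) -> float:
    """Best-case hours to rebuild one drive at a constant rebuild rate."""
    capacity_bytes = capacity_tb * 1e12
    rate_bytes_per_s = rate_gb_per_s * 1e9
    return capacity_bytes / rate_bytes_per_s / 3600


if __name__ == "__main__":
    hours = rebuild_hours(capacity_tb=20, rate_gb_per_s=2.4)
    print(f"20TB at 2.4GB/s: about {hours:.1f} hours")
```

The shorter this window, the less time the array spends exposed to a further drive failure.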

NetApp’s E-Series systems using advanced RAID controllers achieve 99.9999% availability with 42 drives per array.
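An availability percentage is easier to reason about as allowed downtime. The following sketch converts one into the other, assuming a 365-day year and counting all outage time equally:

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def annual_downtime_seconds(availability_percent: float) -> float:
    """Maximum downtime per year implied by an availability percentage."""
    unavailable_fraction = 1 - availability_percent / 100
    return unavailable_fraction * SECONDS_PER_YEAR


if __name__ == "__main__":
    for pct in (99.9, 99.99, 99.999, 99.9999):
        print(f"{pct}% -> {annual_downtime_seconds(pct):.1f} s of downtime per year")
```

By this calculation, 99.9999% ("six nines") leaves a budget of roughly half a minute of downtime per year.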

Thermal & Power Dynamics

Cutting-edge cooling solutions combat component heat:

- Liquid Immersion Cooling: 45kW heat rejection per rack

- Phase-Change Materials: 150W/cm² transient heat absorption

- Dynamic Voltage Scaling: 0.2ms response to workload spikes

Microsoft’s Arctic Circle data center reduces PUE to 1.03 through direct-to-chip liquid cooling.
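PUE (Power Usage Effectiveness) is total facility power divided by IT equipment power, so a PUE of 1.03 means 3% overhead for cooling and power distribution. A small sketch of that relationship, treating the 45kW rack figure above as the IT load purely for illustration:

```python
def facility_power_kw(it_power_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value."""
    return it_power_kw * pue


def overhead_power_kw(it_power_kw: float, pue: float) -> float:
    """Power spent on everything other than IT equipment (cooling, conversion)."""
    return it_power_kw * (pue - 1)


if __name__ == "__main__":
    it_load = 45.0  # kW, assumed equal to the rack's heat rejection figure
    for pue in (1.03, 1.5):  # 1.5 is an arbitrary comparison point
        print(f"PUE {pue}: total {facility_power_kw(it_load, pue):.2f} kW, "
              f"overhead {overhead_power_kw(it_load, pue):.2f} kW")
```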

Security Integration

Hardware-rooted security mechanisms include:

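    def secure_boot_chain():
        # Illustrative pseudocode: the helper functions and the
        # threats_detected flag are placeholders, not a real API.
        verify_tpm_measurement()        # check TPM boot measurements
        check_secure_flash_signature()  # check the firmware image signature
        enforce_memory_encryption()     # turn on memory encryption
        if threats_detected:
            initiate_self_healing_process()

- Intel SGX Enclaves: 12% performance overhead for encrypted computation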

- AMD Infinity Guard: 256-bit memory encryption across 512GB RAM

- Self-Encrypting Drives: AES-XTS 256-bit with 2.5M IOPS capability
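The TPM measurement step in a secure boot chain rests on the PCR "extend" operation: each boot stage is hashed, and the register is replaced by the hash of its old value concatenated with that digest. The sketch below imitates this in software with SHA-256. It is an illustration under stated assumptions, not a TPM driver: the stage names are hypothetical, and a real TPM performs the extend in hardware.

```python
import hashlib
import hmac


def extend_pcr(pcr: bytes, component: bytes) -> bytes:
    """Extend a SHA-256 PCR: new = SHA256(old || SHA256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(pcr + measurement).digest()


def measure_boot_chain(components: list) -> bytes:
    """Fold every boot stage, in order, into one PCR value."""
    pcr = bytes(32)  # assumed reset state: 32 zero bytes
    for blob in components:
        pcr = extend_pcr(pcr, blob)
    return pcr


def verify_measurement(components: list, expected_pcr: bytes) -> bool:
    """Compare the recomputed PCR with a known-good value in constant time."""
    return hmac.compare_digest(measure_boot_chain(components), expected_pcr)


if __name__ == "__main__":
    # Hypothetical boot stages standing in for real firmware images.
    good_chain = [b"firmware-v1", b"bootloader-v1", b"kernel-v1"]
    golden = measure_boot_chain(good_chain)

    tampered = [b"firmware-v1", b"bootloader-EVIL", b"kernel-v1"]
    print("clean boot verified:", verify_measurement(good_chain, golden))
    print("tampered boot verified:", verify_measurement(tampered, golden))
```

Because each value depends on every earlier one, changing or reordering any stage yields a different final PCR, which is what lets a verifier detect tampering.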

Financial institutions reduced attack surfaces by 78% through hardware security integration.

Interconnect Breakthroughs

PCIe 6.0 and CXL 3.0 transform component communication:

- 64GT/s Signaling: 256GB/s bidirectional throughput on a x16 link

- FLIT Mode: 2.15x bandwidth efficiency over PCIe 5.0

- Memory Semantics: 40ns cache-coherent access to pooled memory
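The 256GB/s figure can be reproduced from the per-lane signaling rate. This sketch computes raw link bandwidth, assuming one bit per transfer per lane and a x16 link, and ignoring FLIT, FEC, and protocol overhead, so usable throughput is somewhat lower:

```python
def pcie_raw_gb_per_s(gt_per_s: float, lanes: int = 16) -> float:
    """Raw bandwidth in GB/s for one direction of a PCIe link."""
    return gt_per_s * lanes / 8  # 8 bits per byte


def pcie_bidirectional_gb_per_s(gt_per_s: float, lanes: int = 16) -> float:
    """Raw bandwidth in GB/s summed over both directions."""
    return 2 * pcie_raw_gb_per_s(gt_per_s, lanes)


if __name__ == "__main__":
    for name, rate in [("PCIe 5.0", 32), ("PCIe 6.0", 64)]:
        print(f"{name} x16: {pcie_raw_gb_per_s(rate):.0f} GB/s per direction, "
              f"{pcie_bidirectional_gb_per_s(rate):.0f} GB/s bidirectional")
```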

For comparison, NVIDIA’s Grace Hopper Superchip reaches 900GB/s of coherent chip-to-chip bandwidth using its proprietary NVLink-C2C interconnect rather than PCIe or CXL.
